| |

And a reminder to UK porn viewers, don't believe the BBFC's fake assurances that your data 'should' be kept safe. Companies regularly sell, give away, exploit, lose and get it stolen, and of course, hand it over to the authorities

|

|

|

| 30th March 2018

|

|

| See article from mashable.com |

The athletic apparel company Under Armour has announced a massive data breach affecting at least 150 million users of its food and nutrition app MyFitnessPal. On March 25, the MyFitnessPal team became aware that an unauthorized party acquired data

associated with MyFitnessPal user accounts in late February 2018, reads a press release detailing the breach. The investigation indicates that the affected information included usernames, email addresses, and hashed passwords - the majority with the

hashing function called bcrypt used to secure passwords. There is one other bit of good news: It looks like social security numbers and credit cards were not stolen in the digital heist. |

| |

So when even the most senior internet figures can't keep our data safe, why does Matt Hancock want to force us to hand over our porn browsing history to the Mindgeek Gang?

|

|

|

| 29th March 2018

|

|

| 20th March 2018. See article from

dailymail.co.uk

See The Cambridge Analytica scandal isn't a

scandal: this is how Facebook works from independent.co.uk |

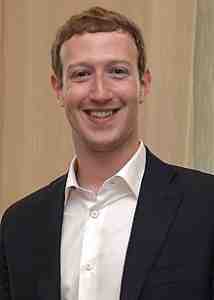

The Culture Secretary has vowed to end the Wild West for tech giants amid anger at claims data from Facebook users was harvested to be used by political campaigns. Matt Hancock warned social media companies that they could be slapped with new

rules and regulations to rein them in. It comes amid fury at claims the Facebook data of around 50 million users was taken without their permission and used by Cambridge Analytica. The firm played a key role in mapping out the behaviour of

voters in the run-up to the 2016 US election and the EU referendum campaign earlier that year. Tory MP Damian Collins, chairman of the Culture select committee, has said he wants to haul Mark Zuckerberg to Parliament to explain himself. Hancock said:

Tech companies store the data of billions of people around the world - giving an unparalleled insight into the lives and thoughts of people. And they must do more to show they are storing the data responsibly.

Update: They'll have to put a price on his head if they want Zuckerberg hauled in front of a judge 28th March 2018. See

article from theguardian.com Mark Zuckerberg has turned down the request

to appear in front of a UK parliamentary committee for a good grilling. In response to the request, Facebook has suggested one of two executives could speak to parliament: Chris Cox, the company's chief product officer, who is in charge of the

Facebook news feed, or Mike Schroepfer, the chief technology officer, who heads up the developer platform. The Culture select committee chair, Damian Collins said: It is absolutely astonishing that Mark

Zuckerberg is not prepared to submit himself to questioning in front of a parliamentary or congressional hearing, given these are questions of fundamental importance and concern to his users, as well as to this inquiry. I would certainly urge him to

think again if he has any care for people that use his company's services.

Update: Pulling the big data plug 29th March 2018. See

article from reuters.com Facebook said on Wednesday it would end its

partnerships with several large data brokers who help advertisers target people on the social network. Facebook has for years given advertisers the option of targeting their ads based on data collected by companies such as Acxiom Corp and Experian PLC.

Facebook has also adjusted the privacy settings on its service, giving users control over their personal information in fewer taps. This move also reflects new European privacy laws soon to come in force. Update:

Facebook's listening 29th March 2018. See article from

dailymail.co.uk

Christopher Wylie, the whistleblower who revealed lots of interesting stuff about Facebook and Cambridge Analytica, has been speaking to the Commons Digital, Culture, Media and Sport Committee about what Facebook gets up to. He told the committee

that he believes the social media giant is able to decipher whether someone is out in a crowd of people, in the office or at home. Asked by Conservative MP Damian Collins whether Facebook can listen to what people are saying to shape their

advertising, Wylie said they use the smartphone app microphone for environmental purposes. My understanding generally of how companies use it... not just Facebook, but generally other apps that pull audio, is for

environmental context. So if, for example, you have a television playing versus if you're in a busy place with a lot of people talking versus a work environment. It's not to say they're listening to what

you're saying. It's not natural language processing. That would be hard to scale.

It is interesting to note that he said companies don't listen in to conversations because they can't for the moment. But the explanation is phrased such

that they will listen to conversations just as soon as the technology allows. |

| |

The BBFC consults about age verification for internet porn, and ludicrously suggests that the data oligarchs can be trusted with your personal identity data because they will follow 'best practice'

|

|

|

| 26th March 2018

|

|

| From bbfc.co.uk |

Your

data is safe with us.

We will follow 'best practices', honest!

|

The BBFC has launched its public consultation about its arrangements for censoring porn on the internet. The document was clearly written before the Cambridge Analytica data abuse scandal. The BBFC's gullibility in accepting the word of age

verification providers and porn websites, that they will look after your data, now rather jars with what we see going on in the real world. After all European data protection laws allow extensive use of your data, and there are absolutely no laws

governing what foreign websites can do with your identity data and porn browsing history. I think that under the current arrangements, if a Russian website were to hand identity data and porn browsing history straight over to the Kremlin

dirty tricks department, then as long as under 18s were prohibited, the BBFC would have to approve that website's age verification arrangements. Anyway there will be more debate on the subject over the coming month. The BBFC

writes: Consultation on draft Guidance on Age-Verification Arrangements and draft Guidance on Ancillary Service Providers Under section 14(1) of the Digital Economy Act 2017, all providers of online

commercial pornographic services accessible from the UK will be required to carry age-verification controls to ensure that their content is not normally accessible to children. This legislation is an important step in making the

internet a safer place for children. The BBFC was designated as the age-verification regulator under Part 3 of the Digital Economy Act 2017 on 21 February 2018. Under section 25 of the Digital Economy Act

2017, the BBFC is required to publish two sets of Guidance: Guidance on Age-verification Arrangements and Guidance on Ancillary Service Providers. The BBFC is now holding a public consultation on its draft Guidance

on Age-Verification Arrangements and its draft Guidance on Ancillary Service Providers. The deadline for responses is 23 April 2018. We will consider and publish responses before submitting final versions of the

Guidance to the Secretary of State for approval. The Secretary of State is then required to lay the Guidance in parliament for formal approval. We support the government's decision to allow a period of up to three months after the Guidance is formally

approved before the law comes into force, in order to give industry sufficient time to comply with the legislation. Draft Guidance on Age-verification Arrangements Under section 25 of the Digital

Economy Act 2017, the BBFC is required to publish: "guidance about the types of arrangements for making pornographic material available that the regulator will treat as complying with section 14(1)".

The draft Guidance on Age-Verification Arrangements sets out the criteria by which the BBFC will assess that a person has met with the requirements of section 14(1) of the Act. The draft guidance outlines good practice, such as

offering choice of age-verification solutions to consumers. It also includes information about the requirements that age-verification services and online pornography providers must adhere to under data protection legislation and the role and functions of

the Information Commissioner's Office (ICO). The draft guidance also sets out the BBFC's approach and powers in relation to online commercial pornographic services and considerations in terms of enforcement action. Draft

Guidance on Ancillary Service Providers Under section 25 of the Digital Economy Act 2017, the BBFC is required to publish: "guidance for the purposes of section 21(1) and (5) about the circumstances in which it will treat

services provided in the course of a business as enabling or facilitating the making available of pornographic material or extreme pornographic material". The draft Guidance on Ancillary Service Providers includes a

non-exhaustive list of classes of ancillary service provider that the BBFC will consider notifying under section 21 of the Act, such as social media and search engines. The draft guidance also sets out the BBFC's approach and powers in relation to online

commercial pornographic services and considerations in terms of enforcement action. How to respond to the consultation We welcome views on the draft Guidance in particular in relation to the

following questions: Guidance on Age-Verification Arrangements

Do you agree with the BBFC's Approach as set out in Chapter 2? Do you agree with the BBFC's Age-verification Standards set out in Chapter 3? Do you have any comments with

regards to Chapter 4?

The BBFC will refer any comments regarding Chapter 4 to the Information Commissioner's Office for further consideration. Draft Guidance on Ancillary Service Providers

Please submit all responses (making reference to specific sections of the guidance where relevant) and confidentiality forms as email attachments to: DEA-consultation@bbfc.co.uk The

deadline for responses is 23 April 2018. We will consider and publish responses before submitting final versions of the Guidance to the Secretary of State for approval. Update: Intentionally

scary 31st March 2018. From Wake Me Up In Dreamland on twitter.com The failure to ensure data privacy/ protection in the Age Ver legislation is wholely intentional. Its intended to scare people away from adult material as a

precursor to even more web censorship in UK. |

| |

Scottish court convicts YouTuber Count Dankula over Nazi dog joke

|

|

|

|

25th March 2018

|

|

| 22nd March 2018. See article from bbc.com

See

article from standard.co.uk

See

Youtube video: M8 Yur Dogs A Nazi from YouTube |

A man who filmed a pet dog giving Nazi salutes before putting the footage on YouTube has been convicted of committing a hate crime. Mark Meechan recorded his girlfriend's pug, Buddha, responding to statements such as gas the Jews and Sieg Heil by

raising its paw. It is interesting to note that the British press carefully avoided informing readers of Meechan's now well known YouTube name, Count Dankula. The original clip had been viewed more than three million times on YouTube. It is

still online on YouTube, albeit in restricted mode where it is not included in searches and comments are not accepted. Meechan went on trial at Airdrie Sheriff Court where he denied any wrongdoing. He insisted he made the video, which was posted

in April 2016, to annoy his girlfriend Suzanne Kelly, 29. But Sheriff Derek O'Carroll found him guilty of a charge under the Communications Act that he posted a video on social media and YouTube which O'Carroll claimed to be grossly offensive

because it was anti-semitic and racist in nature and was aggravated by religious prejudice. Meechan will be sentenced on 23rd April but has hinted in social media that court officials are looking into some sort of home arrest option. Comedian Ricky Gervais took to Twitter to comment on the case after the verdict. He tweeted:

A man has been convicted in a UK court of making a joke that was deemed 'grossly offensive'. If you don't believe in a person's right to say things that you might find 'grossly offensive', then you don't believe in

Freedom of Speech.

Yorkshire MP Philip Davies has demanded a debate on freedom of speech. Speaking in the House of Commons, he said: We guard our freedom of speech in this House very dearly

indeed...but we don't often allow our constituents the same freedoms. Can we have a debate about freedom of speech in this country - something this country has long held dear and is in danger of throwing away needlessly?

Andrea

Leadsom, leader of the Commons, responded that there are limits to free speech: I absolutely commend (Mr Davies) for raising this very important issue. We do of course fully support free speech ...HOWEVER...

there are limits to it and he will be aware there are laws around what you are allowed to say and I don't know the circumstances of his specific point, but he may well wish to seek an adjournment debate to take this up directly with ministers.

Comment: Freedom of expression includes the right to offend

See article from indexoncensorship.org Index on Censorship condemns the decision by a

Scottish court to convict a comedian of a hate crime for teaching his girlfriend's dog a Nazi salute. Mark Meechan, known as Count Dankula, was found guilty on Tuesday of being grossly offensive, under the UK's Communications Act

of 2003. Meechan could be sentenced with up to six months in prison and be required to pay a fine. Index disagrees fundamentally with the ruling by the Scottish Sheriff Appeals Court. According to the Daily Record, Judge Derek

O'Carroll ruled: The description of the video as humorous is no magic wand. This court has taken the freedom of expression into consideration. But the right to freedom of expression also comes with responsibility.

Index on Censorship chief executive Jodie Ginsberg said: Numerous rulings by British and European courts have affirmed that freedom of

expression includes the right to offend. Defending everyone's right to free speech must include defending the rights of those who say things we find shocking or offensive. Otherwise the freedom is meaningless. One of the most

noted judgements is from a 1976 European Court of Human Rights case, Handyside v. United Kingdom, which found: Freedom of expression... is applicable not only to 'information' or 'ideas' that are favourably received or regarded as inoffensive or as a

matter of indifference, but also to those that offend, shock or disturb the State or any sector of the population.

Video Comment: Count Dankula has been found guilty See video from YouTube by The Britisher A powerful video response to another step in the

decline of British free speech. Offsite Comment : Hate-speech laws help only the powerful 23rd March 2018. See

article from spiked-online.com by Alexander Adams

Talking to the press after the judgement, Meechan said today, context and intent were completely disregarded. He explained during the trial that he was not a Nazi and that he had posted the video to annoy his girlfriend. Sheriff Derek O'Carroll declared

the video anti-Semitic and racist in nature. He added that the accused knew that the material was offensive and knew why it was offensive. The original investigation was launched following zero complaints from the public. Offensiveness apparently depends

on the sensitivity of police officers and judges. ...Read the full article from spiked-online.com

Offsite: Giving offence is both inevitable and often necessary in a plural society 25th March 2018. See

article from theguardian.com by Kenan Malik

Even the Guardian can find a space for disquiet about the injustice in the Scottish Count Dankula case. |

| |

|

|

|

|

22nd March 2018

|

|

|

The Register investigates touching on the dark web, smut monopolies and moral outrage See article from theregister.co.uk

|

| |

The Digital Policy Alliance, a group of parliamentarians, waxes lyrical about its document purporting to be a code of practice for age verification, and then charges 90 quid + VAT to read it

|

|

|

| 19th March 2018

|

|

| See article from theregister.co.uk

See

article from shop.bsigroup.com |

The Digital Policy Alliance is a cross party group of parliamentarians with associate members from industry and academia. It has led efforts to develop a Publicly Available Specification (PAS 1296) which was published on 19 March. Crossbench British peer Merlin Hay, the Earl of Erroll, said:

We need to make the UK a safe place to do business, he said. That's why we're producing a British PAS... that set out for the age check providers what they should do and what records they keep.

The

document is expected to include a discussion on the background to age verification, set out the rules in accordance with the Digital Economy Act, and give a detailed look at the technology, with annexes on anonymity and how the system should work.

This PAS will sit alongside data protection and privacy rules set out in the General Data Protection Regulation and in the UK's Data Protection Bill, which is currently making its way through Parliament. Hay explained:

We can't put rules about data protection into the PAS... That is in the Data Protection Bill, he said. So we refer to them, but we can't mandate them inside this PAS -- but it's in there as 'you must obey the law'... [perhaps] that's been too subtle for

the organisations that have been trying to take a swing at it.

What Hay didn't mention though was that all of this 'help' for British industry would come with a hefty £90 + VAT price tag for a 60-page document. |

| |

But will a porn site with an unadvertised Russian connection follow these laws, or will it pass on IDs and browsing histories straight to the 'dirt digging' department of the KGB?

|

|

|

| 17th March 2018

|

|

| See article from theregister.co.uk

|

The Open Rights Group, Myles Jackman and Pandora Blake have done a magnificent job in highlighting the dangers of mandating that porn companies verify the age of their customers. Worst case scenario In the worst case scenario,

foreign porn companies will demand official ID from porn viewers and then be able to maintain a database of the complete browsing history of those officially identified viewers. And surely much to the alarm of the government and the newly

appointed internet porn censors at the BBFC, then this worst case scenario seems to be the clear favourite to get implemented. In particular Mindgeek, with a near monopoly on free porn tube sites, is taking the lead with its Age ID scheme. Now for

some bizarre reason, the government saw no need for its age verification to offer any specific protection for porn viewers, beyond that offered by existing and upcoming data protection laws. Given some of the things that Google and Facebook do with

personal data then it suggests that these laws are woefully inadequate for the context of porn viewing. For safety and national security reasons, data identifying porn users should be kept under total lock and key, and not used for any commercial

reason whatsoever. A big flaw But therein lies the flaw of the law. The government is mandating that all websites, including those based abroad, should verify their users without specifying any data protection requirements beyond

the law of the land. The flaw is that foreign websites are simply not obliged to respect British data protection laws. So as a topical example, there would be nothing to prevent a Russian porn site (maybe not identifying itself as Russian) from

requiring ID and then passing the ID and subsequent porn browsing history straight over to its dirty tricks department. Anyway the government has made a total pig's ear of the concept with its conservative 'leave it to industry to find a solution'

approach. The porn industry simply does not have the safety and security of its customers at heart. Perhaps the government should have invested in its own solution first, at least the national security implications may have pushed it into at least

considering user safety and security. Where we are at As mentioned above campaigners have done a fine job in identifying the dangers of the government plan and these have been picked up by practically all newspapers. These seem to

have chimed with readers and the entire idea seems to be accepted as dangerous. In fact I haven't spotted anyone, not even 'the think of the children' charities pushing for 'let's just get on with it'. And so now it's over to the authorities to try and

convince people that they have a safe solution somewhere. The Digital Policy Alliance

Perhaps as part of a propaganda campaign to win over the people, parliament's Digital Policy Alliance are just about to publish guidance on age verification policies. The alliance is a cross party group that includes Merlin Hay, the Earl of Erroll, who

made some good points about privacy concerns whilst the bill was being forced through the House of Lords. He said that a Publicly Available Specification (PAS) numbered 1296 is due to be published on 19 March. This will set out for the age check

providers what they should do and what records they keep. The document is expected to include a discussion on the background to age verification, set out the rules in accordance with the Digital Economy Act, and give a detailed look at the

technology, with annexes on anonymity and how the system should work. However the document will carry no authority and is not set to become an official British standard. He explained: We can't put rules about

data protection into the PAS... That is in the Data Protection Bill, he said. So we refer to them, but we can't mandate them inside this PAS -- but it's in there as 'you must obey the law'...

But of course Hay did not mention that

Russian websites don't have to obey British data protection law. And next the BBFC will have a crack at reducing people's fears Elsewhere in the discussion, Hay suggested the British Board of Film and Internet Censorship could

mandate that each site had to offer more than one age-verification provider, which would give consumers more choice. Next the BBFC will have a crack at minimising people's fears about age verification. It will publish its own guidance document

towards the end of the month, and launch a public consultation about it. |

| |

|

|

|

| 14th March 2018

|

|

|

A commendably negative take from The Sun. A legal expert has revealed the hidden dangers of strict new porn laws, which will force Brits to hand over personal info in exchange for access to XXX videos See

article from thesun.co.uk |

| |

Is it just me or is Matt Hancock just a little too keen to advocate ID checks just for the state to control 'screen time'? Are we sure that such snooping wouldn't be abused for other reasons of state control?

|

|

|

| 13th March 2018

|

|

| See article from alphr.com |

It's no secret the UK government has a vendetta against the internet and social media. Now, Matt Hancock, the secretary of state for Digital, Culture, Media and Sport (DCMS) wants to push that further, and enforce screen time cutoffs for UK children on

Facebook, Instagram and Snapchat. Talking to the Sunday Times, Hancock explained that the negative impacts of social media need to be dealt with, and he laid out his idea for an age-verification system to apply more widely than just porn viewing.

He outlined that age-verification could be handled similarly to film classifications, with sites like YouTube being restricted to those over 18. The worrying thing, however, is his plan to create mandatory screen time cutoffs for all children.

Referencing the porn restrictions he said: People said 'How are you going to police that?' I said if you don't have it, we will take down your website in Britain. The end result is that the big porn sites are introducing this globally, so we are leading

the way. ...Read the full article from alphr.com Advocating internet censorship See

article from gov.uk Whenever politicians speak of 'balance' it inevitably means that the

balance will soon swing from people's rights towards state control. Matt Hancock more or less announced further internet censorship in a speech at the Oxford Media Convention. He said: Our schools and our curriculum have a

valuable role to play so students can tell fact from fiction and think critically about the news that they read and watch. But it is not easy for our children, or indeed for anyone who reads news online. Although we have robust

mechanisms to address disinformation in the broadcast and press industries, this is simply not the case online. Take the example of three different organisations posting a video online. If a broadcaster

published it on their on demand service, the content would be a matter for Ofcom. If a newspaper posted it, it would be a matter for IPSO. If an individual published it online, it would be untouched by

media regulation. Now I am passionate in my belief in a free and open Internet ....BUT... freedom does not mean the freedom to harm others. Freedom can only exist within a framework. Digital

platforms need to step up and play their part in establishing online rules and working for the benefit of the public that uses them. We've seen some positive first steps from Google, Facebook and Twitter recently, but even tech

companies recognise that more needs to be done. We are looking at the legal liability that social media companies have for the content shared on their sites. Because it's a fact on the web that online platforms are no longer just

passive hosts. But this is not simply about applying publisher or broadcaster standards of liability to online platforms. There are those who argue that every word on every platform should be the full legal

responsibility of the platform. But then how could anyone ever let me post anything, even though I'm an extremely responsible adult? This is new ground and we are exploring a range of ideas... including

where we can tighten current rules to tackle illegal content online... and where platforms should still qualify for 'host' category protections. We will strike the right balance between addressing issues

with content online and allowing the digital economy to flourish. This is part of the thinking behind our Digital Charter. We will work with publishers, tech companies, civil society and others to establish a new framework...

A change of heart on press censorship It was only a few years ago when the government were all in favour of creating a press censor. However new fears such as Russian interference and fake news have turned the mainstream

press into the champions of trustworthy news. And so previous plans for a press censor have been put on hold. Hancock said in the Oxford speech: Sustaining high quality journalism is a vital public policy goal. The scrutiny, the

accountability, the uncovering of wrongs and the fuelling of debate is mission critical to a healthy democracy. After all, journalists helped bring Stephen Lawrence's killers to justice and have given their lives reporting from

places where many of us would fear to go. And while I've not always enjoyed every article written about me, that's not what it's there for. I tremble at the thought of a media regulated by the state in a

time of malevolent forces in politics. Get this wrong and I fear for the future of our liberal democracy. We must get this right. I want publications to be able to choose their own path, making decisions like how to make the most

out of online advertising and whether to use paywalls. After all, it's your copy, it's your IP. The removal of Google's 'first click free' policy has been a welcome move for the news sector. But I ask the question - if someone is

protecting their intellectual property with a paywall, shouldn't that be promoted, not just neutral in the search algorithm? I've watched the industry grapple with the challenge of how to monetise content online, with different

models of paywalls and subscriptions. Some of these have been successful, and all of them have evolved over time. I've been interested in recent ideas to take this further and develop new subscription models for the industry.

Our job in Government is to provide the framework for a market that works, without state regulation of the press. |

| |

Will it change its name back to the British Board of Film Censors? The Daily Mail seems to think so

|

|

|

| 13th March 2018

|

|

| See article from dailymail.co.uk

|

The Daily Mail today ran the story that the DCMS have decided to take things a little more cautiously about the privacy (and national security) issues of allowing a foreign porn company to take control of databasing people's porn viewing history. Anyway there was nothing new in the story but it was interesting to note the Freudian slip of referring to the BBFC as the

British Board of Film Censorship. My idea would be for the BBFC to rename itself with the more complete title, the British Board of Film and Internet Censorship. |

| |

A few more details from the point of view of British adult websites

|

|

|

| 12th March 2018

|

|

| See article from wired.co.uk |

|

| |

The Government announces a new timetable for the introduction of internet porn censorship, now set to be in force by the end of 2018

|

|

|

| 11th March 2018

|

|

| See press release from gov.uk

|

In a press release the DCMS describes its digital strategy including a delayed introduction of internet porn censorship. The press release states: The Strategy also reflects the Government's ambition to make the internet

safer for children by requiring age verification for access to commercial pornographic websites in the UK. In February, the British Board of Film Classification (BBFC) was formally designated as the age verification regulator. Our

priority is to make the internet safer for children and we believe this is best achieved by taking time to get the implementation of the policy right. We will therefore allow time for the BBFC as regulator to undertake a public consultation on its draft

guidance which will be launched later this month. For the public and the industry to prepare for and comply with age verification, the Government will also ensure a period of up to three months after the BBFC guidance has been

cleared by Parliament before the law comes into force. It is anticipated age verification will be enforceable by the end of the year.

|

| |

|

|

|

|

11th March 2018

|

|

|

The BBFC takes its first steps to explain how it will stop people from watching internet porn See article from bbfc.co.uk |

| |

|

|

|

| 9th March 2018

|

|

|

Age verification providers say that a single login will be enough, but for those that use browser incognito mode, repeated logins will be required See

article from trustedreviews.com |

| |

So how come the BBFC are saying virtually nothing about internet porn censorship and seem happy for newspapers to point out the incredibly dangerous privacy concerns of letting porn websites hold browsing records

|

|

|

| 7th March 2018

|

|

| See article from bbc.com |

The BBC seems to have done a good job voicing the privacy concerns of the Open Rights Group as the article has been picked up by most of the British press. The Open Rights Group says it fears a data breach is inevitable as the deadline approaches

for a controversial change in the way people in the UK access online pornography. Myles Jackman, legal director of the Open Rights Group, said while MindGeek had said it would not hold or store data, it was not clear who would - and by signing in

people would be revealing their sexual preferences. If the age verification process continues in its current fashion, it's a once-in-a-lifetime treasure trove of private information, he said. If it gets hacked,

can British citizens ever trust the government again with their data? The big issues here are privacy and security.

Jackman said it would drive more people to use virtual private networks (VPNs) - which mask a

device's geographical location to circumvent local restrictions - or the anonymous web browser Tor. He commented: It is brutally ironic that when the government is trying to break all encryption in order to combat

extremism, it is now forcing people to turn towards the dark web.

MindGeek, which runs sites including PornHub, YouPorn and RedTube, said its AgeID age verification tool had been in use in Germany since 2015. It said its software

would use third-party age-verification companies to authenticate the age of those signing in. AgeID spokesman James Clark told the BBC there were multiple verification methods that could be used - including credit card, mobile SMS, passport and

driving licence - but that it was not yet clear which would be compliant with the law. For something that is supposed to be coming in April, and requires software updates by websites, it is surely about time that the government and/or the BBFC

actually told people about the detailed rules for when age verification is required and what methods will be acceptable to the censors. The start date has not actually been confirmed yet and the BBFC haven't even acknowledged that they have

accepted the job as the UK porn censor. The BBFC boss, David Austin, spouted some nonsense to the BBC claiming that age verification was already in place for other services, including some video-on-demand sites. In fact 'other' services such as

gambling sites have got totally different privacy issues and aren't really relevant to porn. The only method in place so far is to demand credit cards to access porn; the only thing that this has proved is that it is totally unviable for the businesses

involved, and is hardly relevant to how the dominant tube sites work. In fact a total absence of input from the BBFC is already leading to some alarming takes on the privacy issues of handing over people's porn viewing records to porn companies.

Surely the BBFC would be expected to provide official state propaganda trying to convince the worried masses that they have nothing to fear and that porn websites have people's best interests at heart. For instance, the Telegraph follow-up report writes

(See article from telegraph.co.uk):

Incoming age verification checks for people who watch pornography online are at risk of their sexual tastes being exposed, a privacy expert has warned. The Government has given the all clear for one of the largest pornography companies to organise

the arrangements for verification but experts claim that handing this power to the porn industry could put more people at risk. Those viewing porn will no longer be anonymous and their sexual tastes may be easily revealed through a cache of the

websites they have visited, according to Jim Killock, director of Open Rights Group. He warned: These are the most sensitive, embarrassing viewing habits that have potentially life-changing consequences if they become

public. In order for it to work, the company will end up with a list of every webpage of all of the big pornographic products someone has visited. Just like Google and Facebook, companies want to profile you and send you

advertisements based on what you are searching for. So what are AgeID going to do now that they have been given unparalleled access to people's pornographic tastes? They are going to decide what people's sexual tastes are and the

logic of that is impossible to resist. Even if they give reassurances, I just cannot see why they wouldn't. A database with someone's sexual preferences, highlighted by the web pages visited and geographically traceable through

the IP address, would be a target for hackers who could use them for blackmail or simply to cause humiliation. Imagine if you are a teacher and the pornography that you looked at - completely legally - became public? It would be

devastating for someone's career.

|

| |

|

|

|

| 5th March 2018

|

|

|

Torrent Freak surveys VPN providers that may be useful when UK internet porn censorship starts See article from torrentfreak.com

|

| |

The BBC notes that it is only a few weeks until age verification is required for porn sites yet neither the government nor the BBFC has been able to provide details to the BBC about how it will work.

|

|

|

| 28th February 2018

|

|

| See article from bbc.com |

The BBC writes: A few weeks before a major change to the way in which UK viewers access online pornography, neither the government nor the appointed regulator has been able to provide details to the BBC about how it will work.

From April 2018, people accessing porn sites will have to prove they are aged 18 or over. Both bodies said more information would be available soon. The British Board of Film

Classification (BBFC) was named by parliament as the regulator in December 2017. (But wasn't actually appointed until 21st February 2018. However the BBFC has been working on its censorship procedures for many months already but has refused to speak

about this until formally appointed). The porn industry has been left to develop its own age verification tools. Prof Alan Woodward, cybersecurity expert at Surrey University, told the BBC this presented

porn sites with a dilemma - needing to comply with the regulation but not wanting to make it difficult for their customers to access content. I can't imagine many porn-site visitors will be happy uploading copies of passports and driving licences to such

a site. And, the site operators know that. |

| |

|

|

|

| 25th February 2018

|

|

|

Age verification of all pornographic content will be mandatory from April 2018. But there are still a lot of grey areas See article from wired.co.uk

|

| |

|

|

|

| 24th February 2018

|

|

|

And why the adult industry is worried. By Lux Alptraum See article from theverge.com |

| |

The government has now officially appointed the BBFC as its internet porn censor

|

|

|

| 23rd February 2018

|

|

| See letter [pdf] from gov.uk

|

The DCMS has published a letter dated 21st February 2018 that officially appoints the BBFC as its internet porn censor. It euphemistically describes the role as an age verification regulator. Presumably a few press releases will follow and now the

BBFC can at least be expected to comment on how the censorship will be implemented. The enforcement has previously been noted as starting around late April or early May but this does not seem to give sufficient time for the required software to

be implemented by websites. |

| |

|

|

|

| 20th February 2018

|

|

|

What the new regulations mean for your US business See article from avn.com |

| |

Now when they are all set to go, the Daily Mail decides that porn sites are not fit and proper companies to be trusted with implementing the checks

|

|

|

| 18th February 2018

|

|

| See article from dailymail.co.uk

|

As the introduction of age verification for websites approaches, it seems that the most likely outcome is that Mindgeek, the company behind most of the tube sites, is set to become the self appointed gatekeeper of porn. Its near monopoly on free porn

means that it will be the first port of call for people wanting access, and people who register with them will be able to surf large numbers of porn sites with no verification hassle. And they are then not going to be very willing to go through all the

hassle again for a company not enrolled in the Mindgeek scheme. Mindgeek is therefore set to become the Amazon/eBay/Google/Facebook of porn. There is another very promising age verification system, AVSecure, that sounds way better than Mindgeek's AgeID.

AVSecure plans to offer age verification passes from supermarkets and post offices. They will give you a card with a code that requires no further identification whatsoever beyond looking obviously over 25. 18-25 year olds will have to show ID but

it need not be recorded in the system. Adult websites can then check the verification code that will reveal only that the holder is over 18. All website interactions will be further protected by blockchain encryption. The Mindgeek scheme is the

most well promoted for the moment and so is seen as the favourite to prevail. The Daily Mail is now having doubts about the merits of trusting a porn company with age verification on the grounds that the primary motivation is to make money. The Daily Mail

has also spotted that vast swathes of worldwide porn are nominally held to be illegal by the government under the Obscene Publications Act. Notably female ejaculation is held to be obscene as the government claims it to be illegal because the

ejaculate contains urine. I think the government is on a hiding to nothing if it persists in its silly claims of obscenity; they are simply years out of date and the world has moved on. Anyway the Daily Mail spouts: The

moguls behind the world's biggest pornography websites have been entrusted by the Government with policing the internet to keep it safe for children. MindGeek staff have held a series of meetings with officials in preparation for the new age verification

system which is designed to ensure that under-18s cannot view adult material. Tens of millions of British adults are expected to have to entrust their private details to MindGeek, which owns the PornHub and YouPorn websites.

Critics have likened the company's involvement to entrusting the cigarette industry with stopping underage smoking and want an independent body to create the system instead. A Mail on Sunday investigation has

found that material on the company's porn websites could be in breach of the Obscene Publications Act. A search for one sexual act, which would be considered illegal to publish videos of under the Obscene Publications Act, returned nearly 20,000 hits on

PornHub. The Mail on Sunday did not watch any of the videos. Shadow Culture Minister Liam Byrne said: It is alarming that a company given the job of checking whether viewers of pornography are

over 18 can't even police publication of illegal material on its own platform.

A DCMS spokesman said: The Government will not be endorsing individual age-verification solutions but

they will need to abide by data protection laws to be compliant.

|

| |

|

|

|

| 18th February 2018

|

|

|

Sky UK block gay-teen support website deemed pornographic by its algorithms See article from gaystarnews.com

|

| |

YouTube videos that are banned for UK eyes only

|

|

|

| 17th February 2018

|

|

| See article from vpncompare.co.uk |

The United Kingdom's reputation for online freedom has suffered significantly in recent years, in no small part due to the draconian Investigatory Powers Act, which came into power last year and created what many people have described

as the worst surveillance state in the free world. But despite this, the widely held perception is that the UK still allows relatively free access to the internet, even if they do insist on keeping records on what sites you are

visiting. But how true is this perception? ... There is undeniably more online censorship in the UK than many people would like to admit to. But is this just the tip of the iceberg? The censorship of one

YouTube video suggests that it might just be. The video in question contains footage filmed by a trucker of refugees trying to break into his vehicle in order to get across the English Channel and into the UK. This is a topic which has been widely

reported in British media in the past, but in the wake of the Brexit vote and the removal of the so-called 'Jungle Refugee Camp', there has been strangely little coverage. The video in question is entitled

'Epic Hungarian Trucker runs the Calais migrant gauntlet.' It is nearly 15 minutes long and does feature the driver's

extremely forthright opinions about the refugees in question as well as some fairly blue language. Yet, if you try to access this video in the UK, you will find that it is blocked. It remains accessible to users elsewhere in the

world, albeit with content warnings in place. And it is not alone. It doesn't take too much research to uncover several similar videos which are also censored in the UK. The scale of the issue likely requires further research. But

it is safe to say that such censorship is both unnecessary and potentially illegal as it is undeniably denying British citizens access to content which would feed an informed debate on some crucial issues. |

| |

The UK reveals a tool to detect uploads of jihadi videos

|

|

|

|

15th February 2018

|

|

| 13th February 2018. See article from bbc.com |

The UK government has unveiled a tool it says can accurately detect jihadist content and block it from being viewed. Home Secretary Amber Rudd told the BBC she would not rule out forcing technology companies to use it by law. Rudd is visiting the

US to meet tech companies to discuss the idea, as well as other efforts to tackle extremism. The government provided £600,000 of public funds towards the creation of the tool by an artificial intelligence company based in London. Thousands

of hours of content posted by the Islamic State group was run past the tool, in order to train it to automatically spot extremist material. ASI Data Science said the software can be configured to detect 94% of IS video uploads. Anything the

software identifies as potential IS material would be flagged up for a human decision to be taken. The company said it typically flagged 0.005% of non-IS video uploads. But this figure is meaningless without an indication of how many contained any

content that has any connection with jihadis. In London, reporters were given an off-the-record briefing detailing how ASI's software worked, but were asked not to share its precise methodology. However, in simple terms, it is an algorithm that

draws on characteristics typical of IS and its online activity. It sounds like the tool is more about analysing data about the uploading account, geographical origin, time of day, name of poster etc rather than analysing the video itself.
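The point about the 0.005% figure can be made concrete with a little arithmetic. The sketch below takes the two rates quoted above (94% of IS uploads detected, 0.005% of non-IS uploads flagged) and applies them to a batch of uploads. The batch size and the number of IS videos in it are invented purely for illustration, and the function name is hypothetical; nothing here reflects how ASI's tool actually works.

```python
def flag_breakdown(total_uploads, is_uploads,
                   detection_rate=0.94, false_flag_rate=0.00005):
    """Expected flag counts for one batch of video uploads.

    detection_rate and false_flag_rate are the figures quoted by ASI
    (94% and 0.005%); total_uploads and is_uploads are assumptions
    supplied by the caller.
    """
    non_is_uploads = total_uploads - is_uploads
    correct_flags = is_uploads * detection_rate      # IS videos caught
    missed = is_uploads - correct_flags              # IS videos that slip through
    wrong_flags = non_is_uploads * false_flag_rate   # unrelated videos flagged
    flagged = correct_flags + wrong_flags
    # Share of everything sent for human review that is not IS material.
    share_wrong = wrong_flags / flagged if flagged else 0.0
    return {
        "correct_flags": correct_flags,
        "wrong_flags": wrong_flags,
        "missed": missed,
        "share_wrong": share_wrong,
    }


# Hypothetical batch: one million uploads, of which 100 are IS videos.
example = flag_breakdown(total_uploads=1_000_000, is_uploads=100)
for name, value in example.items():
    print(f"{name}: {value:.3f}")
```

With these made-up inputs about 94 IS videos are flagged alongside roughly 50 unrelated ones, so around a third of what reaches a human reviewer would be a mistake. Raise or lower the assumed number of IS uploads and that share moves sharply, which is why the headline rate says little on its own.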

Comment: Even extremist takedowns require accountability 15th February 2018. See article from openrightsgroup.org

Can extremist material be identified at 99.99% certainty as Amber Rudd claims today? And how does she intend to ensure that there is legal accountability for content removal? The Government is very keen to ensure that

extremist material is removed from private platforms, like Facebook, Twitter and Youtube. It has urged use of machine learning and algorithmic identification by the companies, and threatened fines for failing to remove content swiftly.

Today Amber Rudd claims to have developed a tool to identify extremist content, based on a database of known material. Such tools can have a role to play in identifying unwanted material, but we need to understand that there are some

important caveats to what these tools are doing, with implications about how they are used, particularly around accountability. We list these below. Before we proceed, we should also recognise that this is often about computers

(bots) posting vast volumes of material with a very small audience. Amber Rudd's new machine may then potentially clean some of it up. It is in many ways a propaganda battle between extremists claiming to be internet savvy and exaggerating their impact,

while our own government claims that they are going to clean up the internet. Both sides benefit from the apparent conflict. The real world impact of all this activity may not be as great as is being claimed. We should be given

much more information about what exactly is being posted and removed. For instance the UK police remove over 100,000 pieces of extremist content by notice to companies: we currently get just this headline figure only. We know nothing more about these

takedowns. They might have never been viewed, except by the police, or they might have been very influential. The results of the government's campaign to remove extremist material may be to push them towards more private or

censor-proof platforms. That may impact the ability of the authorities to surveil criminals and to remove material in the future. We may regret chasing extremists off major platforms, where their activities are in full view and easily used to identify

activity and actors. Whatever the wisdom of proceeding down this path, we need to be worried about the unwanted consequences of machine takedowns. Firstly, we are pushing companies to be the judges of legal and illegal. Secondly,

all systems make mistakes and require accountability for them; mistakes need to be minimised, but also rectified. Here is our list of questions that need to be resolved. 1 What really is the accuracy of

this system? Small error rates translate into very large numbers of errors at scale. We see this with more general internet filters in the UK, where our blocked.org.uk project regularly uncovers and reports errors.

How are the accuracy rates determined? Is there any external review of its decisions? The government appears to recognise the technology has limitations. In order to claim a high accuracy rate, they say at

least 6% of extremist video content has to be missed. On large platforms that would be a great deal of material needing human review. The government's own tool shows the limitations of their prior demands that technology "solve" this problem.

Islamic extremists are operating rather like spammers when they post their material. Just like spammers, their techniques change to avoid filtering. The system will need constant updating to keep a given level of accuracy.

2 Machines are not determining meaning Machines can only attempt to pattern match, with the assumption that content and form imply purpose and meaning. This explains how errors can occur, particularly in

missing new material. 3 Context is everything The same content can, in different circumstances, be legal or illegal. The law defines extremist material as promoting or glorifying terrorism. This is a

vague concept. The same underlying material, with small changes, can become news, satire or commentary. Machines cannot easily determine the difference. 4 The learning is only as good as the underlying material

The underlying database is used to train machines to pattern match. Therefore the quality of the initial database is very important. It is unclear how the material in the database has been deemed illegal, but it is likely that these

are police determinations rather than legal ones, meaning that inaccuracies or biases in police assumptions will be repeated in any machine learning. 5 Machines are making no legal judgment The

machines are not making a legal determination. This means a company's decision to act on what the machine says is absent of clear knowledge. At the very least, if material is "machine determined" to be illegal, the poster, and users who attempt

to see the material, need to be told that a machine determination has been made. 6 Humans and courts need to be able to review complaints Anyone who posts material must be able to get human review,

and recourse to courts if necessary. 7 Whose decision is this exactly? The government wants small companies to use the database to identify and remove material. If material is incorrectly removed,

perhaps appealed, who is responsible for reviewing any mistake? It may be too complicated for the small company. Since it is the database product making the mistake, the designers need to act to correct it so that it is less

likely to be repeated elsewhere. If the government want people to use their tool, there is a strong case that the government should review mistakes and ensure that there is an independent appeals process.

8 How do we know about errors? Any takedown system tends towards overzealous takedowns. We hope the identification system is built for accuracy and prefers to miss material rather than remove the wrong things, however

errors will often go unreported. There are strong incentives for legitimate posters of news, commentary, or satire to simply accept the removal of their content. To complain about a takedown would take serious nerve, given that you risk being flagged as

a terrorist sympathiser, or perhaps having to enter formal legal proceedings. We need a much stronger conversation about the accountability of these systems. So far, in every context, this is a question the government has

ignored. If this is a fight for the rule of law and against tyranny, then we must not create arbitrary, unaccountable, extra-legal censorship systems.

|

| |

|

|

|

| 12th February 2018

|

|

|

Katie Price petitions parliament to make internet insults a criminal offence, with the line being drawn somewhere between banter and criminal abuse. And to keep a register of offenders See article from dailymail.co.uk |

| |

Government outlines next steps to make the UK the most censored place to be online

|

|

|

| 7th February 2018

|

|

| See press release from gov.uk

|

Government outlines next steps to make the UK the safest place to be online The Prime Minister has announced plans to review laws and make sure that what is illegal offline is illegal online as the Government marks Safer

Internet Day. The Law Commission will launch a review of current legislation on offensive online communications to ensure that laws are up to date with technology. As set out in the

Internet Safety Strategy Green Paper, the Government is clear that abusive and threatening behaviour online is totally

unacceptable. This work will determine whether laws are effective enough in ensuring parity between the treatment of offensive behaviour that happens offline and online. The Prime Minister has also announced:

That the Government will introduce a comprehensive new social media code of practice this year, setting out clearly the minimum expectations on social media companies The introduction of an annual

internet safety transparency report - providing UK data on offensive online content and what action is being taken to remove it.

Other announcements made today by Secretary of State for Digital, Culture, Media and Sport (DCMS) Matt Hancock include:

A new online safety guide for those working with children, including school leaders and teachers, to

prepare young people for digital life A commitment from major online platforms including Google, Facebook and Twitter to put in place specific support during election campaigns to ensure abusive content can be dealt with

quickly -- and that they will provide advice and guidance to Parliamentary candidates on how to remain safe and secure online

DCMS Secretary of State Matt Hancock said: We want to make the UK the safest place in the world to be online and having listened to the views of parents, communities and industry, we are delivering

on the ambitions set out in our Internet Safety Strategy.

Not only are we seeing if the law needs updating to better tackle online harms, we are moving forward with our plans for online platforms to have

tailored protections in place - giving the UK public standards of internet safety unparalleled anywhere else in the world.

Law Commissioner Professor David Ormerod QC said: There

are laws in place to stop abuse but we've moved on from the age of green ink and poison pens. The digital world throws up new questions and we need to make sure that the law is robust and flexible enough to answer them.

If we are to be safe both on and off line, the criminal law must offer appropriate protection in both spaces. By studying the law and identifying any problems we can give government the full picture as it works to make the UK the

safest place to be online. The latest announcements follow the publication of the Government's

Internet Safety Strategy Green Paper last year which outlined plans for a social media code of practice. The aim is to prevent abusive behaviour online, introduce more effective reporting mechanisms to tackle bullying or harmful content, and give

better guidance for users to identify and report illegal content. The Government will be outlining further steps on the strategy, including more detail on the code of practice and transparency reports, in the spring. To support

this work, people working with children including teachers and school leaders will be given a new guide for online safety, to help educate young people in safe internet use. Developed by the UK Council for Child Internet Safety (

UKCCIS), the toolkit describes the knowledge and skills for staying safe online that children and young people should have at

different stages of their lives. Major online platforms including Google, Facebook and Twitter have also agreed to take forward a recommendation from the Committee on Standards in Public Life (CSPL) to provide specific support for

Parliamentary candidates so that they can remain safe and secure while on these sites during election campaigns. These are important steps in safeguarding the free and open elections which are a key part of our democracy. Notes

Included in the Law Commission's scope for their review will be the Malicious Communications Act and the Communications Act. It will consider whether difficult concepts need to be reconsidered in the light of technological

change - for example, whether the definition of who a 'sender' is needs to be updated. The Government will bring forward an Annual Internet Safety Transparency report, as proposed in our Internet Safety Strategy green paper. The

reporting will show:

the amount of harmful content reported to companies the volume and proportion of this material that is taken down how social media companies are handling and responding to

complaints how each online platform moderates harmful and abusive behaviour and the policies they have in place to tackle it.

Annual reporting will help to set baselines against which to benchmark companies' progress, and encourage the sharing of best practice between companies. The new social media code of practice will outline

standards and norms expected from online platforms. It will cover:

The development, enforcement and review of robust community guidelines for the content uploaded by users and their conduct online The prevention of abusive behaviour online and the misuse of social

media platforms -- including action to identify and stop users who are persistently abusing services The reporting mechanisms that companies have in place for inappropriate, bullying and harmful content, and ensuring they

have clear policies and performance metrics for taking this content down The guidance social media companies offer to help users identify illegal content and contact online, and advise them on how to report it to the

authorities, to ensure this is as clear as possible The policies and practices companies apply around privacy issues.

Comment: Preventing protest 7th February 2018. See article from indexoncensorship.org

The UK Prime Minister's proposals for possible new laws to stop intimidation against politicians have the potential to prevent legal protests and free speech that are at the core of our democracy, says Index on Censorship. One hundred years after the

suffragette demonstrations won the right for women to have the vote for the first time, a law that potentially silences angry voices calling for change would be a retrograde step. No one should be threatened with violence, or

subjected to violence, for doing their job, said Index chief executive Jodie Ginsberg. However, the UK already has a host of laws dealing with harassment of individuals both off and online that cover the kind of abuse politicians receive on social media

and elsewhere. A loosely defined offence of 'intimidation' could cover a raft of perfectly legitimate criticism of political candidates and politicians -- including public protest.

|

| |

Houses of Commons and Lords approve the appointment of the BBFC as the UK's internet porn censor

|

|

|

| 2nd February 2018

|

|

| See article from hansard.parliament.uk |

House of Commons Delegated Legislation Committee Proposal for Designation of Age-verification Regulator Thursday 1 February 2018 The Minister of State, Department for Digital, Culture, Media and Sport (Margot James)

I beg to move, That the Committee has considered the Proposal for Designation of Age-verification Regulator. The Digital Economy Act 2017

introduced a requirement for commercial providers of online pornography to have robust age-verification controls in place to prevent children and young people under the age of 18 from accessing pornographic material. Section 16 of the Act states that the

Secretary of State may designate by notice the age-verification regulator and may specify which functions under the Act the age-verification regulator should hold. The debate will focus on two issues. I am seeking Parliament's approval to designate the

British Board of Film Classification as the age-verification regulator and approval for the BBFC to hold in this role specific functions under the Act.

Liam Byrne (Birmingham, Hodge Hill) (Lab)

At this stage, I would normally preface my remarks with a lacerating attack on how the Government are acquiescing in our place in the world as a cyber also-ran, and I would attack them for their rather desultory position and

attitude to delivering a world-class digital trust regime. However, I am very fortunate that this morning the Secretary of State has made the arguments for me. This morning, before the Minister arrived, the Secretary of State launched his new app, Matt

Hancock MP. It does not require email verification, so people are already posting hardcore pornography on it. When the Minister winds up, she might just tell us whether the age-verification regulator that she has proposed, and that we will approve this

morning, will oversee the app of the Secretary of State as well.

Question put and agreed to. House of Lords See article from hansard.parliament.uk

Particulars of Proposed Designation of Age-Verification Regulator 01 February 2018 Motion to Approve moved by Lord Ashton of Hyde Section 16 of the Digital Economy Act states that the Secretary of

State may designate by notice the age-verification regulator, and may specify which functions under the Act the age-verification regulator should hold. I am therefore seeking this House's approval to designate the British Board of Film Classification as

the age-verification regulator. We believe that the BBFC is best placed to carry out this important role, because it has unparalleled expertise in this area.

Lord Stevenson of Balmacara (Lab)

I still argue, and I will continue to argue, that it is not appropriate for the Government to give statutory powers to a body that is essentially a private company. The BBFC is, as I have said before -- I do not want to go into any

detail -- a company limited by guarantee. It is therefore a profit-seeking organisation. It is not a charity or body that is there for the public good. It was set up purely as a protectionist measure to try to make sure that people responsible for

producing films that were covered by a licensing regime in local authorities that was aggressive towards certain types of films -- it was variable and therefore not good for business -- could be protected by a system that was largely undertaken

voluntarily. It was run by the motion picture production industry for itself.

Lord Ashton of Hyde

I will just say that the BBFC is set up as an

independent non-governmental body with a corporate structure, but it is a not-for-profit corporate structure. We have agreed funding arrangements for the BBFC for the purposes of the age-verification regulator. The funding is ring-fenced for this

function. We have agreed a set-up cost of just under £1 million and a running cost of £800,000 for the first year. No other sources of funding will be required to carry out this work, so there is absolutely no question of influence from industry

organisations, as there is for its existing work—it will be ring-fenced.

Motion agreed.

|

| |

|

|

|

|

2nd February 2018

|

|

|

XBiz publishes an interesting technical report on how the various age verification tools will work. See article from xbiz.com

|

| |

The Daily Mail does its bit on Pornhub's AgeID scheme, which will require porn viewers to enter personal details that are then checked out by the government, and which then asks customers to believe that their details won't be stored

|

|

|

| 1st February 2018

|

|

| See article from dailymail.co.uk

See

article from gizmodo.co.uk |

The Daily Mail explains: Online ID checks will require viewers to prove they are 18 before viewing any porn online, as part of the Digital Economy Act 2017. Users will need to make their AgeID account

using a passport or mobile phone to confirm their age. The information will then be passed to a government-approved service to confirm the user is over 18.

A MindGeek spokesman claimed:

We do not store any personal data entered during the age-verification process.

But Gizmodo is sceptical of this because the AgeID privacy policy small print says that information may be used:

to develop and display content and advertising tailored to your interests on our Website and other sites. It also states: We also may use these technologies to collect information about your online activities over time and across

third-party websites or other online services. So basically MindGeek has the option of tracking your porn habits and your general non-porn browsing so it can sell you better ads. |

| |

Broadband Genie survey reveals that only 11% to 19% of people would be happy providing identity data for age verification purposes

|

|

|

|

31st January 2018

|

|

| See article from broadbandgenie.co.uk

|

Although a majority are in favour of verifying age, it seems far fewer people in our survey would be happy to actually go through verification themselves. Only 19% said they'd be comfortable sharing information directly with an adult site, and just 11%

would be comfortable handing details to a third party. ... Read the full article from broadbandgenie.co.uk

|

| |

|

|

|

| 25th January 2018

|

|

|

It is clear that the BBFC are set to censor porn websites, but what about the grey area of non-porn websites about porn and sex work? The BBFC falsely claim they don't know yet as they haven't begun work on their guidelines. See

article from sexandcensorship.org |

| |

The government publishes its guidance to the new UK porn censor about notifying websites that they are to be censored, asking payment providers and advertisers to end their service, recourse to ISP blocks and an appeals process

|

|

|

| 22nd January 2018

|

|

| See

Guidance from the Secretary of State for Digital, Culture, Media and Sport to the Age-Verification Regulator for Online Pornography [pdf] from gov.uk |

A few extracts from the document:

Introduction

- A person contravenes Part 3 of the Digital Economy Act 2017 if they make pornographic material available on the internet on a commercial basis to persons in the United Kingdom without ensuring that the material is not normally accessible to persons under the age of 18. Contravention could lead to a range of measures being taken by the age-verification regulator in relation to that person, including blocking by internet service providers (ISPs).

- Part 3 also gives the age-verification regulator powers to act where a person makes extreme pornographic material (as defined in section 22 of the Digital Economy Act 2017) available on the internet to persons in the United Kingdom.

Purpose

This guidance has been written to provide the framework for the operation of the age-verification regulatory regime in the following areas:

● Regulator's approach to the exercise of its powers;

● Age-verification arrangements;

● Appeals;

● Payment-services Providers and Ancillary Service Providers;

● Internet Service Provider blocking; and

● Reporting.

Enforcement principles

This guidance balances two overarching principles in the regulator's application of its powers under

sections 19, 21 and 23 - that it should apply its powers in the way which it thinks will be most effective in ensuring compliance on a case-by-case basis and that it should take a proportionate approach. As set out in

this guidance, it is expected that the regulator, in taking a proportionate approach, will first seek to engage with the non-compliant person to encourage them to comply, before considering issuing a notice under section 19, 21 or 23, unless there are

reasons as to why the regulator does not think that is appropriate in a given case.

Regulator's approach to the exercise of its powers

The age-verification consultation Child

Safety Online: Age verification for pornography identified that an extremely large number of websites contain pornographic content - circa 5 million sites or parts of sites. All providers of online pornography, who are making available pornographic

material to persons in the United Kingdom on a commercial basis, will be required to comply with the age-verification requirement. In exercising its powers, the regulator should take a proportionate approach. Section

26(1) specifically provides that the regulator may, if it thinks fit, choose to exercise its powers principally in relation to persons who, in the age-verification regulator's opinion:

- (a) make pornographic material or extreme pornographic material available on the internet on a commercial basis to a large number of persons, or a large number of persons under the age of 18, in the United Kingdom; or

- (b) generate a large amount of turnover by doing so.

In taking a proportionate approach, the regulator should have regard to the following:

- a. As set out in section 19, before making a determination that a person is contravening section 14(1), the regulator must allow that person an opportunity to make representations about why the determination should not be made. To ensure clarity and discourage evasion, the regulator should specify a prompt timeframe for compliance and, if it considers it appropriate, set out the steps that it considers that the person needs to take to comply.

- b. When considering whether to exercise its powers (whether under section 19, 21 or 23), including considering what type of notice to issue, the regulator should consider, in any given case, which intervention will be most effective in encouraging compliance, while balancing this against the need to act in a proportionate manner.

- c. Before issuing a notice to require internet service providers to block access to material, the regulator must always first consider whether issuing civil proceedings or giving notice to ancillary service providers and payment-services providers might have a sufficient effect on the non-complying person's behaviour.

To help ensure transparency, the regulator should publish on its website details of any notices under sections 19, 21 and 23.

Age-verification arrangements

Section 25(1) provides that the regulator must publish guidance about the types of arrangements for making pornographic material available that the regulator will treat as complying with section 14(1). This guidance is subject to a Parliamentary procedure.

A person making pornographic material available on a commercial basis to persons in the United Kingdom must have an

effective process in place to verify a user is 18 or over. There are various methods for verifying whether someone is 18 or over (and it is expected that new age-verification technologies will develop over time). As such, the Secretary of State considers

that rather than setting out a closed list of age-verification arrangements, the regulator's guidance should specify the criteria by which it will assess, in any given case, that a person has met with this requirement. The regulator's guidance should

also outline good practice in relation to age verification to encourage consumer choice and the use of mechanisms which confirm age, rather than identity. The regulator is not required to approve individual

age-verification solutions. There are various ways to age verify online and the industry is developing at pace. Providers are innovating and providing choice to consumers. The process of verifying age for adults should

be concerned only with the need to establish that the user is aged 18 or above. The privacy of adult users of pornographic sites should be maintained and the potential for fraud or misuse of personal data should be safeguarded. The key focus of many

age-verification providers is on privacy and specifically providing verification, rather than identification of the individual.

Payment-services providers and ancillary service providers

There is no requirement in the Digital Economy Act for payment-services providers or ancillary service providers to take any action on receipt of such a notice. However, Government expects that responsible companies will wish to

withdraw services from those who are in breach of UK legislation by making pornographic material accessible online to children or by making extreme pornographic material available. The regulator should consider on a

case-by-case basis the effectiveness of notifying different ancillary service providers (and payment-services providers). There is a wide range of providers whose services may be used by pornography providers to

enable or facilitate making pornography available online and who may therefore fall under the definition of ancillary service provider in section 21(5)(a). Such a service is not limited to where a direct financial relationship is in place between the

service and the pornography provider. Section 21(5)(b) identifies those who advertise commercially on such sites as ancillary service providers. In addition, others include, but are not limited to:

- a. Platforms which enable pornographic content or extreme pornographic material to be uploaded;

- b. Search engines which facilitate access to pornographic content or extreme pornographic material;

- c. Discussion fora and communities in which users post links;

- d. Cyberlockers and cloud storage services on which pornographic content or extreme pornographic material may be stored;

- e. Services including websites and App marketplaces that enable users to download Apps;

- f. Hosting services which enable access to websites, Apps or App marketplaces that enable users to download Apps;

- g. Domain name registrars;

- h. Set-top boxes, mobile applications and other devices that can connect directly to streaming servers.

Internet Service Provider blocking

The regulator should only issue a notice to an internet service provider having had regard to Chapter 2 of this guidance. The regulator should take a

proportionate approach and consider all actions (Chapter 2.4) before issuing a notice to internet service providers. In determining those ISPs that will be subject to notification, the regulator should take into

consideration the number and the nature of customers, with a focus on suppliers of home and mobile broadband services. The regulator should consider any ISP that promotes its services on the basis of pornography being accessible without age verification

irrespective of other considerations. The regulator should take into account the child safety impact that will be achieved by notifying a supplier with a small number of subscribers and ensure a proportionate approach.