31st March 2018

In Europe, internet platforms are incentivized to take down first, ask questions second. By William Echikson. See article from politico.eu

31st March 2018

But it is ending up censoring everything and anything to do with sex. See article from time.com

Facebook seems to have blocked an independent research project analysing how the Facebook news feed algorithm works

30th March 2018

See article from openrightsgroup.org

Facebook Tracking Exposed (FTE) is a browser extension which intends to find out how the Facebook algorithm works, but you won't find it in the Chrome store because Facebook have issued a takedown request.

Facebook don't want you to know how their algorithm works. That will hardly be a shock to you or anyone else, but it is a serious problem. The algorithm is what Facebook uses to determine what you, or anyone else around the world, will see. What it chooses to promote or bury has become increasingly important to our democracy.

But Facebook don't want you to know how it works. Facebook Tracking Exposed (FTE) is a browser extension which intends to find out. It lets users compare their timeline posts against the potential chronological content, helping them to understand why some posts have been promoted and others haven't. It also allows comparative research, pooling data to help researchers try to reverse engineer the algorithm itself.

So far, so great, but you won't be able to find FTE in the Chrome store because Facebook have issued a takedown on the basis of an alleged trademark infringement. Facebook do not want you to know how their algorithm works: how it controls the flow of information to billions of people.

To pretend the premise of Facebook's trademark claim is reasonable for a second (it's not likely, as the 'Facebook' in the name describes the purpose of the tool rather than who made it), the question becomes: is it reasonable for Facebook to use this as an excuse to continue to obfuscate their filtering of important information? The answer, as all of the news around Cambridge Analytica is making clear, is that it absolutely is not. People looking for a tool to understand the platform they are using would find it very difficult to find without 'Facebook' in the name. But then, Facebook don't want you to know how their algorithm works.

This is easy for Facebook to fix: they could revoke their infringement claim and start taking on some genuine accountability. There is no guarantee that FTE will be able to perfectly reveal the exact workings of the algorithm; attempts to reverse engineer proprietary algorithms are difficult, and observations will always be partial and difficult to control and validate. That doesn't change the fact that companies like Facebook and Google need to be transparent about the ways they filter information. The information they do or don't show people can affect opinions, and potentially even sway elections.

We are calling on Google to reinstate the application on the Chrome store and for Facebook to withdraw their request to remove the app. But, then, Facebook don't want you to know how their algorithm works. The equivalent add-on for Firefox is also now unavailable.

And a reminder to UK porn viewers: don't believe the BBFC's fake assurances that your data 'should' be kept safe. Companies regularly sell it, give it away, exploit it, lose it, get it stolen and, of course, hand it over to the authorities.

30th March 2018

See article from mashable.com

The athletic apparel company Under Armour has announced a massive data breach affecting at least 150 million users of its food and nutrition app MyFitnessPal. A press release detailing the breach reads: On March 25, the MyFitnessPal team became aware that an unauthorized party acquired data associated with MyFitnessPal user accounts in late February 2018. The investigation indicates that the affected information included usernames, email addresses and hashed passwords, the majority of them protected by bcrypt, a hashing function designed for securing passwords. There is one other bit of good news: it looks like Social Security numbers and credit card details were not stolen in the digital heist.

Amazon hides away erotic books in its latest search algorithm

30th March 2018

See article from dailymail.co.u

Amazon has changed its search algorithm to prevent erotic books from surfacing unless a user searches for erotic novels. Amazon is trying to make its vast website a bit less NSFW. The internet giant made some sudden changes to the way that erotic novels surface in its search results. As a result of the update, erotic novels have been filtered out of the results for main categories and many of their best-selling titles have been relegated into nowhere land. The move has angered many erotica authors who say it could lead to a massive dent in revenue. A best-seller label, such as the one carried by Fifty Shades of Grey, might otherwise make a title more likely to appear at the top of search results.

Amazon has yet to issue a statement on the changes to its search results. However, one author said they received a notice from Amazon via email following an inquiry. It said: We've re-reviewed your book and confirmed that it contains erotic or sexually explicit content. We have found that when books are placed in the correct category it increases visibility to customers who are seeking that content. In addition, we are working on improvements to our store to further improve our search experience for customers.

It is not yet clear whether the search algorithms have been changed in response to the US internet censorship requirements recently passed in FOSTA, which nominally require censorship of content related to sex trafficking but in fact impact a much wider range of adult content.

30th March 2018

The harvesting of our personal details goes far beyond what many of us could imagine. So I braced myself and had a look. By Dylan Curran. See article from theguardian.com

So when even the most senior internet figures can't keep our data safe, why does Matt Hancock want to force us to hand over our porn browsing history to the Mindgeek Gang?

29th March 2018

20th March 2018. See article from dailymail.co.uk

See The Cambridge Analytica scandal isn't a scandal: this is how Facebook works, from independent.co.uk

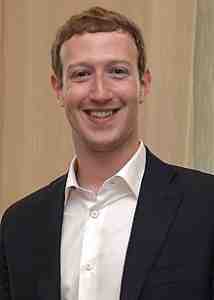

The Culture Secretary has vowed to end the Wild West for tech giants amid anger at claims data from Facebook users was harvested to be used by political campaigns. Matt Hancock warned social media companies that they could be slapped with new rules and regulations to rein them in. It comes amid fury at claims that the Facebook data of around 50 million users was taken without their permission and used by Cambridge Analytica. The firm played a key role in mapping out the behaviour of voters in the run-up to the 2016 US election and the EU referendum campaign earlier that year. Tory MP Damian Collins, chairman of the Culture select committee, has said he wants to haul Mark Zuckerberg to Parliament to explain himself. Hancock said:

Tech companies store the data of billions of people around the world - giving an unparalleled insight into the lives and thoughts of people. And they must do more to show they are storing the data responsibly.

Update: They'll have to put a price on his head if they want Zuckerberg hauled in front of a judge

28th March 2018. See article from theguardian.com

Mark Zuckerberg has turned down the request to appear in front of a UK parliamentary committee for a good grilling. In response to the request, Facebook has suggested one of two executives could speak to parliament: Chris Cox, the company's chief product officer, who is in charge of the Facebook news feed, or Mike Schroepfer, the chief technology officer, who heads up the developer platform. The Culture select committee chair, Damian Collins, said:

It is absolutely astonishing that Mark Zuckerberg is not prepared to submit himself to questioning in front of a parliamentary or congressional hearing, given these are questions of fundamental importance and concern to his users, as well as to this inquiry. I would certainly urge him to think again if he has any care for people that use his company's services.

Update: Pulling the big data plug

29th March 2018. See article from reuters.com

Facebook said on Wednesday it would end its partnerships with several large data brokers who help advertisers target people on the social network. Facebook has for years given advertisers the option of targeting their ads based on data collected by companies such as Acxiom Corp and Experian PLC.

Facebook has also adjusted the privacy settings on its service, giving users control over their personal information in fewer taps. This move also reflects new European privacy laws soon to come into force.

Update: Facebook's listening

29th March 2018. See article from dailymail.co.uk

Christopher Wylie, the whistleblower who revealed lots of interesting stuff about Facebook and Cambridge Analytica, has been speaking to the Commons Digital, Culture, Media and Sport Committee about what Facebook gets up to. He told the committee that he believes the social media giant is able to decipher whether someone is out in a crowd of people, in the office or at home. Asked by Conservative MP Damian Collins whether Facebook can listen to what people are saying to shape their advertising, Wylie said they use the smartphone app microphone for environmental purposes:

My understanding generally of how companies use it... not just Facebook, but generally other apps that pull audio, is for environmental context. So if, for example, you have a television playing versus if you're in a busy place with a lot of people talking versus a work environment. It's not to say they're listening to what you're saying. It's not natural language processing. That would be hard to scale.

It is interesting to note that he said companies don't listen in to conversations because they can't for the moment. But the explanation is phrased such that they will listen to conversations just as soon as the technology allows.

US internet companies go into censor everything mode just in case they are held responsible for users using internet services for sex trafficking

28th March 2018

See article from avn.com

See censorship rules from microsoft.com

See article from denofgeek.com

The US has just passed an internet censorship bill, FOSTA, that holds internet companies responsible if users use their services to facilitate sex trafficking. It sounds a laudable aim on paper, but in reality how can, say, Microsoft actually prevent users from using communication or storage services to support trafficking? Well, the answer is that there is no real way to distinguish, say, adverts for legal sex workers from those for illegal sex workers. So the only answer for internet companies is to censor and ban ALL communications that could possibly be related to sex.

There have already been several responses from internet companies along these lines. Small ads company Craigslist has just taken down ALL personal ads just in case sex traffickers may be lurking there. A Craigslist spokesperson explained: Any tool or service can be misused. We can't take such risk without jeopardizing all our other services.

Last week, several online porn performers who use Google Drive to store and distribute their adult content files reported that the service had suddenly and without warning blocked or deleted their files, posing a threat to their income streams. And now it seems that Microsoft is following suit for users of its internet services in the USA.

Microsoft has now banned offensive language, as well as nudity and porn, from any of its services, which include Microsoft Office, Xbox and even Skype. The broad new ban was quietly inserted into Microsoft's new Terms of Service agreement, which was posted on March 1 and which takes effect on May 1. The new rules also give Microsoft the legal ability to review private user content and block or delete anything, including email, that contains offensive content or language. The rules do not define exactly what would constitute offensive language. In theory, the new ban could let Microsoft monitor, for example, private Skype chats, shutting down calls in which either participant is nude or engaged in sexual conduct.

So wait a sec: I can't use Skype to have an adult video call with my girlfriend? I can't use OneDrive to back up a document that says 'fuck' in it? asked civil liberties advocate Jonathan Corbett in a blog post this week. If I call someone a mean name in Xbox Live, not only will they cancel my account, but also confiscate any funds I've deposited in my account?

denofgeek.com answers some of these queries:

Seemingly aware of the tentative nature of this policy, Microsoft included a couple of disclaimers. First off, the company notes that it cannot monitor the entire Services and will make no attempt to do so. That suggests that Microsoft is not implementing live monitoring. However, it can access stored and shared content when looking into alleged violations. This indicates that part of this policy will work off of a user report system. Microsoft also states that it can remove or refuse to publish content for any reason and reserves the right to block delivery of a communication across services attached to this content policy. Additionally, the punishments for breaking this code of conduct now include the forfeiture of content licenses as well as Microsoft account balances associated with the account. That means that the company could theoretically remove games from your console or seize money in your Microsoft account.

Judge sides with Google over the censorship of alt-right YouTube videos

28th March 2018

See article from thehill.com

A federal judge has dismissed a lawsuit against Google filed by the conservative site PragerU, whose YouTube videos had been censored by Google. U.S. District Court Judge Lucy Koh wrote in her decision that PragerU had failed to demonstrate that age restrictions imposed on the company's videos are a First Amendment violation.

PragerU filed its lawsuit in October, claiming that Google's decision to shunt some of its videos into the dead zone known as restricted mode was motivated by a prejudice against conservatives. The list of restricted videos included segments like The most important question about abortion, Where are the moderate Muslims? and Is Islam a religion of peace?

In her decision, Koh dismissed PragerU's free speech claims, reasoning that Google is not subject to the First Amendment because it's a private company and not a public institution. She wrote:

Defendants are private entities who created their own video-sharing social media website and make decisions about whether and how to regulate content that has been uploaded on that website.

Rhode Island senator withdraws bill calling for $20 charge for internet users to access adult material

28th March 2018

See article from xbiz.com

Rhode Island state Senator Frank Ciccone has pulled his bill that would have charged users $20 to unblock online porn, citing the dubious background of the people who suggested the bill to him. Ciccone said he made the decision to shelve SB 2584, which would have required mandatory porn filters on personal computers and mobile devices, after Time.com and the Associated Press published stories on the main campaigner, Chris Sevier, who has touted his ideas to several states.

Sevier had publicized that the language in his template called the legislation the Elizabeth Smart Law, after the girl who was kidnapped from her Utah home as a teenager in 2002. But Smart wanted nothing to do with Sevier's idea, and she sent a cease-and-desist letter demanding that her name be removed from any promotion of the proposal.

Ciccone later found out about Smart's letter and learned another thing about Sevier: he had a history of outlandish lawsuits, including one trying to marry his computer as a statement against gay marriage. Besides filing similar lawsuits targeting gay marriage in Utah, Texas, Tennessee, South Carolina and Kentucky, Sevier was sentenced to probation after being found guilty four years ago of making harassing threats against country singer John Rich.

People deleting Facebook reveal just how wide the permissions you have granted to Facebook are

27th March 2018

See article from alphr.com

For years, privacy advocates have been shouting about Facebook, and for years the population as a whole didn't care. Whatever the reason, the ongoing Cambridge Analytica saga seems to have temporarily burst this sense of complacency, and people are suddenly giving the company a lot more scrutiny.

When you delete Facebook, the company provides you with a compressed file with everything it has on you. As well as every photo you've ever uploaded and details of any advert you've ever interacted with, the file has left some users panicking that Facebook seems to have been tracking all of their calls and texts. Details of who you've called, when and for how long appear in an easily accessible list, even if you don't use Facebook-owned WhatsApp or Messenger for texts or calls.

It has been put around that Facebook have been logging calls without your permission, but this is not quite the case. In fact Facebook do follow the settings and permissions you have granted, and do not track your calls if you don't give permission. So the issue is people not realising quite how wide the permissions are that they grant when ticking permission boxes. Facebook seemed to confirm this in a statement in response:

You may have seen some recent reports that Facebook has been logging people's call and SMS (text) history without their permission. This is not the case. Call and text history logging is part of an opt-in feature for people using Messenger or Facebook Lite on Android. People have to expressly agree to use this feature. If, at any time, they no longer wish to use this feature they can turn it off.

So there you have it: if you use Messenger or Facebook Lite on Android you have indeed given the company permission to snoop on ALL your calls, not just those made through Facebook apps.

The BBFC consults about age verification for internet porn, and ludicrously suggests that the data oligarchs can be trusted with your personal identity data because they will follow 'best practice'

26th March 2018

From bbfc.co.uk

Your data is safe with us.

We will follow 'best practices', honest!

The BBFC has launched its public consultation about its arrangements for censoring porn on the internet. The document was clearly written before the Cambridge Analytica data abuse scandal. The BBFC's gullibility in accepting the word of age verification providers and porn websites that they will look after your data now rather jars with what we see going on in the real world. After all, European data protection laws allow extensive use of your data, and there are absolutely no laws governing what foreign websites can do with your identity data and porn browsing history. I think that under the current arrangements, if a Russian website were to hand identity data and porn browsing history straight over to the Kremlin's dirty tricks department, then as long as under-18s were prohibited, the BBFC would have to approve that website's age verification arrangements. Anyway, there will be more debate on the subject over the coming month. The BBFC writes:

Consultation on draft Guidance on Age-Verification Arrangements and draft Guidance on Ancillary Service Providers

Under section 14(1) of the Digital Economy Act 2017, all providers of online commercial pornographic services accessible from the UK will be required to carry age-verification controls to ensure that their content is not normally accessible to children. This legislation is an important step in making the internet a safer place for children.

The BBFC was designated as the age-verification regulator under Part 3 of the Digital Economy Act 2017 on 21 February 2018. Under section 25 of the Digital Economy Act 2017, the BBFC is required to publish two sets of Guidance: Guidance on Age-verification Arrangements and Guidance on Ancillary Service Providers. The BBFC is now holding a public consultation on its draft Guidance on Age-Verification Arrangements and its draft Guidance on Ancillary Service Providers. The deadline for responses is 23 April 2018. We will consider and publish responses before submitting final versions of the Guidance to the Secretary of State for approval. The Secretary of State is then required to lay the Guidance in parliament for formal approval. We support the government's decision to allow a period of up to three months after the Guidance is formally approved before the law comes into force, in order to give industry sufficient time to comply with the legislation.

Draft Guidance on Age-verification Arrangements

Under section 25 of the Digital Economy Act 2017, the BBFC is required to publish: "guidance about the types of arrangements for making pornographic material available that the regulator will treat as complying with section 14(1)".

The draft Guidance on Age-Verification Arrangements sets out the criteria by which the BBFC will assess that a person has met with the requirements of section 14(1) of the Act. The draft guidance outlines good practice, such as offering choice of age-verification solutions to consumers. It also includes information about the requirements that age-verification services and online pornography providers must adhere to under data protection legislation and the role and functions of the Information Commissioner's Office (ICO). The draft guidance also sets out the BBFC's approach and powers in relation to online commercial pornographic services and considerations in terms of enforcement action.

Draft Guidance on Ancillary Service Providers

Under section 25 of the Digital Economy Act 2017, the BBFC is required to publish: "guidance for the purposes of section 21(1) and (5) about the circumstances in which it will treat services provided in the course of a business as enabling or facilitating the making available of pornographic material or extreme pornographic material". The draft Guidance on Ancillary Service Providers includes a non-exhaustive list of classes of ancillary service provider that the BBFC will consider notifying under section 21 of the Act, such as social media and search engines. The draft guidance also sets out the BBFC's approach and powers in relation to online commercial pornographic services and considerations in terms of enforcement action.

How to respond to the consultation

We welcome views on the draft Guidance in particular in relation to the following questions:

Guidance on Age-Verification Arrangements

Do you agree with the BBFC's Approach as set out in Chapter 2?

Do you agree with the BBFC's Age-verification Standards set out in Chapter 3?

Do you have any comments with regards to Chapter 4?

The BBFC will refer any comments regarding Chapter 4 to the Information Commissioner's Office for further consideration.

Draft Guidance on Ancillary Service Providers

Please submit all responses (making reference to specific sections of the guidance where relevant) and confidentiality forms as email attachments to: DEA-consultation@bbfc.co.uk

The deadline for responses is 23 April 2018. We will consider and publish responses before submitting final versions of the Guidance to the Secretary of State for approval.

Update: Intentionally scary

31st March 2018. From Wake Me Up In Dreamland on twitter.com

The failure to ensure data privacy/protection in the Age Ver legislation is wholly intentional. It's intended to scare people away from adult material as a precursor to even more web censorship in the UK.

Netherlands voters reject the country's already implemented snooper's charter in a referendum

26th March 2018

See article from reuters.com

Dutch voters have rejected a law that would give spy agencies the power to carry out mass tapping of Internet traffic. Dubbed the 'trawling law' by opponents, the legislation would allow spy agencies to install wiretaps targeting an entire geographic region or avenue of communication, store information for up to three years, and share it with allied spy agencies. The snooping law has already been approved by both houses of parliament. Though the referendum was non-binding, Prime Minister Mark Rutte has vowed to take the result seriously.

US Congress passes an unscrutinised bill to allow foreign countries to snoop on US internet connections, presumably so that GCHQ can pass the data back to the US, so evading a US ban on US snooping on US citizens

25th March 2018

See article from eff.org

On Thursday, the US House approved the omnibus government spending bill, with the unscrutinised CLOUD Act attached, in a 256-167 vote. The Senate followed up late that night with a 65-32 vote in favor. All the bill requires now is the president's signature.

U.S. and foreign police will have new mechanisms to seize data across the globe. Because of this failure, your private emails, your online chats, your Facebook, Google, Flickr photos, your Snapchat videos, your private lives online, your moments shared digitally between only those you trust, will be open to foreign law enforcement without a warrant and with few restrictions on using and sharing your information. Because of this failure, U.S. laws will be bypassed on U.S. soil.

As we wrote before, the CLOUD Act is a far-reaching, privacy-upending piece of legislation that will:

Enable foreign police to collect and wiretap people's communications from U.S. companies, without obtaining a U.S. warrant.

Allow foreign nations to demand personal data stored in the United States, without prior review by a judge.

Allow the U.S. president to enter executive agreements that empower police in foreign nations that have weaker privacy laws than the United States to seize data in the United States while ignoring U.S. privacy laws.

Allow foreign police to collect someone's data without notifying them about it.

Empower U.S. police to grab any data, regardless if it's a U.S. person's or not, no matter where it is stored.

And, as we wrote before, this is how the CLOUD Act could work in practice:

London investigators want the private Slack messages of a Londoner they suspect of bank fraud. The London police could go directly to Slack, a U.S. company, to request and collect those messages. The London police would not necessarily need prior judicial review for this request. The London police would not be required to notify U.S. law enforcement about this request. The London police would not need a probable cause warrant for this collection.

Predictably, in this request, the London police might also collect Slack messages written by U.S. persons communicating with the Londoner suspected of bank fraud. Those messages could be read, stored, and potentially shared, all without the U.S. person knowing about it. Those messages, if shared with U.S. law enforcement, could be used to criminally charge the U.S. person in a U.S. court, even though a warrant was never issued.

This bill has large privacy implications both in the U.S. and abroad. It was never given the attention it deserved in Congress.

Presumably the absence of similar government demands in the west suggests the existence of a quiet arrangement

25th March 2018

See article from theverge.com

Encrypted messaging app Telegram has lost an appeal before Russia's Supreme Court, where it sought to block the country's Federal Security Service (FSB) from gaining access to user data. Last year, the FSB asked Telegram to share its encryption keys and the company declined, resulting in a $14,000 fine. Today, Supreme Court Judge Alla Nazarova upheld that ruling and denied Telegram's appeal. Telegram plans to appeal the latest ruling as well. If Telegram is found to be non-compliant, it could face another fine and even have the service blocked in Russia, one of its largest markets.

Scottish court convicts YouTuber Count Dankula over Nazi dog joke

25th March 2018

22nd March 2018. See article from bbc.com

See article from standard.co.uk

See YouTube video: M8 Yur Dogs A Nazi from YouTube

A man who filmed a pet dog giving Nazi salutes before putting the footage on YouTube has been convicted of committing a hate crime. Mark Meechan recorded his girlfriend's pug, Buddha, responding to statements such as 'gas the Jews' and 'Sieg Heil' by raising its paw. It is interesting to note that the British press carefully avoided informing readers of Meechan's now well-known YouTube name, Count Dankula.

The original clip had been viewed more than three million times on YouTube. It is still online on YouTube, albeit in restricted mode, where it is not included in searches and comments are not accepted.

Meechan went on trial at Airdrie Sheriff Court, where he denied any wrongdoing. He insisted he made the video, which was posted in April 2016, to annoy his girlfriend Suzanne Kelly, 29. But Sheriff Derek O'Carroll found him guilty of a charge under the Communications Act that he posted a video on social media and YouTube which O'Carroll deemed grossly offensive because it was anti-semitic and racist in nature and was aggravated by religious prejudice. Meechan will be sentenced on 23rd April but has hinted on social media that court officials are looking into some sort of home arrest option.

Comedian Ricky Gervais took to Twitter to comment on the case after the verdict. He tweeted:

A man has been convicted in a UK court of making a joke that was deemed 'grossly offensive'. If you don't believe in a person's right to say things that you might find 'grossly offensive', then you don't believe in Freedom of Speech.

Yorkshire MP Philip Davies has demanded a debate on freedom of speech. Speaking in the House of Commons, he said: We guard our freedom of speech in this House very dearly indeed... but we don't often allow our constituents the same freedoms. Can we have a debate about freedom of speech in this country - something this country has long held dear and is in danger of throwing away needlessly?

Andrea Leadsom, leader of the Commons, responded that there are limits to free speech: I absolutely commend (Mr Davies) for raising this very important issue. We do of course fully support free speech ...HOWEVER... there are limits to it and he will be aware there are laws around what you are allowed to say and I don't know the circumstances of his specific point, but he may well wish to seek an adjournment debate to take this up directly with ministers.

Comment: Freedom of expression includes the right to offend

See article from indexoncensorship.org

Index on Censorship condemns the decision by a Scottish court to convict a comedian of a hate crime for teaching his girlfriend's dog a Nazi salute. Mark Meechan, known as Count Dankula, was found guilty on Tuesday of being grossly offensive, under the UK's Communications Act of 2003. Meechan could be sentenced to up to six months in prison and be required to pay a fine.

Index disagrees fundamentally with the ruling by the Scottish Sheriff Appeals Court. According to the Daily Record, Judge Derek O'Carroll ruled: The description of the video as humorous is no magic wand. This court has taken the freedom of expression into consideration. But the right to freedom of expression also comes with responsibility.

Index on Censorship chief executive Jodie Ginsberg said: Numerous rulings by British and European courts have affirmed that freedom of expression includes the right to offend. Defending everyone's right to free speech must include defending the rights of those who say things we find shocking or offensive. Otherwise the freedom is meaningless.

One of the most noted judgements is from a 1976 European Court of Human Rights case, Handyside v. United Kingdom, which found: Freedom of expression ... is applicable not only to 'information' or 'ideas' that are favourably received or regarded as inoffensive or as a matter of indifference, but also to those that offend, shock or disturb the State or any sector of the population.

Video Comment: Count Dankula has been found guilty

See video from YouTube by The Britisher

A powerful video response to another step in the decline of British free speech.

Offsite Comment: Hate-speech laws help only the powerful

23rd March 2018. See article from spiked-online.com by Alexander Adams

Talking to the press after the judgement, Meechan said today, context and intent were completely disregarded. He explained during the trial that he was not a Nazi and that he had posted the video to annoy his girlfriend. Sheriff Derek O'Carroll declared the video anti-Semitic and racist in nature. He added that the accused knew that the material was offensive and knew why it was offensive. The original investigation was launched following zero complaints from the public. Offensiveness apparently depends on the sensitivity of police officers and judges. ...Read the full article from spiked-online.com

Offsite: Giving offence is both inevitable and often necessary in a plural society

25th March 2018. See article from theguardian.com by Kenan Malik

Even the Guardian can find a space for disquiet about the injustice in the Scottish Count Dankula case.

| |

US Congress passes a supposed anti-sex-trafficking bill, and adult consensual sex workers are immediately censored off the internet

23rd March 2018

See article from eff.org

It was a dark day for the Internet. The U.S. Senate just voted 97-2 to pass the Allow States and Victims to Fight Online Sex Trafficking Act (FOSTA, H.R. 1865), a bill that silences online speech by forcing Internet platforms to censor their users. As lobbyists and members of Congress applaud themselves for enacting a law tackling the problem of trafficking, let's be clear: Congress just made trafficking victims less safe, not more.

The version of FOSTA that just passed the Senate combined an earlier version of FOSTA (what we call FOSTA 2.0) with the Stop Enabling Sex Traffickers Act (SESTA, S. 1693). The history of SESTA/FOSTA -- a bad bill that turned into a worse bill and then was rushed through votes in both houses of Congress -- is a story about Congress' failure to see that its good intentions can result in bad law. It's a story of Congress' failure to listen to the constituents who'd be most affected by the laws it passed. It's also the story of some players in the tech sector choosing to settle for compromises and half-wins that will put ordinary people in danger.

Silencing Internet Users Doesn't Make Us Safer

SESTA/FOSTA undermines Section 230, the most important law protecting free speech online. Section 230 protects online platforms from liability for some types of speech by their users. Without Section 230, the Internet would look very different. It's likely that many of today's online platforms would never have formed or received the investment they needed to grow and scale -- the risk of litigation would have simply been too high. Similarly, in the absence of Section 230 protections, noncommercial platforms like Wikipedia and the Internet Archive likely wouldn't have been founded given the high level of legal risk involved with hosting third-party content.

Importantly, Section 230 does not shield platforms from liability under federal criminal law. Section 230 also doesn't shield platforms across-the-board from liability under civil law: courts have allowed civil claims against online platforms when a platform directly contributed to unlawful speech. Section 230 strikes a careful balance between enabling the pursuit of justice and promoting free speech and innovation online: platforms can be held responsible for their own actions, and can still host user-generated content without fear of broad legal liability.

SESTA/FOSTA upends that balance, opening platforms to new criminal and civil liability at the state and federal levels for their users' sex trafficking activities. The platform liability created by new Section 230 carve-outs applies retroactively -- meaning the increased liability applies to trafficking that took place before the law passed. The Department of Justice has raised concerns about this violating the Constitution's Ex Post Facto Clause, at least for the criminal provisions. The bill also expands existing federal criminal law to target online platforms where sex trafficking content appears. The bill is worded so broadly that it could even be used against platform owners that don't know that their sites are being used for trafficking. Finally, SESTA/FOSTA expands federal prostitution law to cover those who use the Internet to promote or facilitate prostitution. The Internet will become a less inclusive place, something that hurts all of us.

And if your eyes glazed over a little at the legal details, here are a few examples of the immediate censorship impact of the new law.

Immediate Chilling Effect on Adult Content

See article from xbiz.com

SESTA's passage by the U.S. Senate has had an immediate chilling effect on those working in the adult industry. Today, stories of a fallout are being heard, with adult performers finding their content being flagged and blocked, an escort site suddenly becoming unavailable, Craigslist shutting down its personals sections and Reddit closing down some of its communities, among other tales. SESTA, which doesn't differentiate between sex trafficking and consensual sex work, targets scores of adult sites that consensual sex workers use to advertise their work. And now, before SESTA reaches President Trump's desk for his guaranteed signature, those sites are scrambling to prevent themselves from being charged under sex trafficking laws. It's not surprising that we're seeing an immediate chilling effect on protected speech, industry attorney Lawrence Walters told XBIZ. This was predicted as the likely impact of the bill, as online intermediaries over-censor content in the attempt to mitigate their own risks. The damage to the First Amendment appears palpable.

Today, longtime city-by-city escort service website CityVibe.com completely disappeared, only to be replaced with a message: Sorry, this website is not available. Tonight, mainstream classified site Craigslist, which serves more than 20 billion page views per month, said that it has dropped personals listings in the U.S. Motherboard reported today that at least six porn performers have complained that files have been blocked without warning from Google's cloud storage service. It seems like all of our videos in Google Drive are getting flagged by some sort of automated system, adult star Lilly Stone told Motherboard. We're not even really getting notified of it, the only way we really found out was one of our customers told us he couldn't view or download the video we sent him. Another adult star, Avey Moon, was trying to send the winner of her Chaturbate contest his prize -- a video titled POV Blowjob -- through her Google Drive account, but it wouldn't send. Reddit made an announcement late yesterday explaining that the site has changed its content policy, forbidding transactions for certain goods and services that include physical sexual contact. A number of subreddits regularly used to help sex workers have been completely banned. Those include r/Escorts, r/MaleEscorts and r/SugarDaddy.


| |

Turkish parliament extends the TV censor's control to internet TV

23rd March 2018

See article from voanews.com

Turkey's parliament on Thursday passed legislation widening government control of the internet, one of the last remaining platforms for critical and independent reporting. The TV (and now internet) censor, RTUK, is controlled by representatives of the ruling AKP party. Under the new legislation, internet broadcasters will have to apply for a licence from the censor, and of course risk being turned down because the government doesn't like them. Websites that do not obtain the required licence will be blocked.

Turkish authorities have already banned more than 170,000 websites, but observers point out that Turks have become increasingly savvy on the internet, using various means to circumvent restrictions, such as virtual private networks (VPNs). But authorities are quickly becoming adept, too. Fifteen VPN providers are currently blocked by Turkey, cyber rights expert Akdeniz said. It's becoming really, really difficult for standard internet users to access banned content. It's not a simple but a complex government machinery now seeking to control the internet.

| |

Egypt set to introduce new law to codify internet censorship currently being arbitrarily implemented

23rd March 2018

See article from advox.globalvoices.org by Netizen Report Team

Egyptian parliamentarians will soon review a draft anti-cybercrime law that could codify internet censorship practices into national law. While the Egyptian government is notorious for censoring websites and platforms on national security grounds, there are no laws in force that explicitly dictate what is and is not permissible in the realm of online censorship. But if the draft law is approved, that will soon change.

Article 7 of the anti-cybercrime law would give investigative authorities the right to order the censorship of websites whenever evidence arises that a website broadcasting from inside or outside the state has published any phrases, photos or films, or any promotional material or the like which constitute a crime, as set forth in this law, and poses a threat to national security or compromises national security or the national economy. Orders issued under Article 7 would need to be approved by a judge within 72 hours of being filed.

Article 31 of the law holds internet service providers responsible for enacting court-approved censorship orders. ISP personnel that fail to comply with orders can face criminal punishment, including steep fines (a minimum of 3 million Egyptian pounds, or 170,000 US dollars) and even imprisonment, if it is determined that their refusal to comply with censorship orders results in damage to national security or the death of one or more persons.

In an interview with independent Cairo-based media outlet Mada Masr, Association of Freedom of Thought and Expression legal director Hassan al-Azhari argued that this would be impossible to prove in practice. The law also addresses issues of personal data privacy, fraud, hacking, and communications that authorities fear are spreading terrorist and extremist ideologies.


| |

23rd March 2018

Jigsaw, the Alphabet-owned Google sibling, will now offer VPN software that you can easily set up on your own server in the cloud. See article from wired.com

| |

Informative YouTube history lesson about Hitler, presented in a light but neutral, observational tone, banned for UK viewers

22nd March 2018

See article from reddit.com

See Hitler OverSimplified Part 1 from YouTube

See Hitler OverSimplified Part 2 from YouTube

[Images: UK / outside UK]

OverSimplified is an informative, factual series of short history lessons in an animated and light tone. However, the commentary is unbiased, observational and neutral. No sane and rational human would consider these videos to be right-wing propaganda or the like. Yet Google has banned them from UK eyes with the message 'This content is not available on this country domain'. Google does not provide a reason for the censorship, but there are two likely candidates.

- Maybe Google's unintelligent AI systems cannot tell the difference between a history lesson about Hitler and a video inciting support for Hitler's ideas.

- Maybe someone flagged the video for unfair reasons, and Google's commercial expediency means it's cheaper and easier to uphold the ban rather than get a moderator to spend a few minutes watching it.

Either way, the censorship will achieve nothing towards what the censors were seeking, but it will alienate those who believe in free speech and democracy.

| |

22nd March 2018

The Register investigates, touching on the dark web, smut monopolies and moral outrage. See article from theregister.co.uk

| |

The Digital Policy Alliance, a group of parliamentarians, waxes lyrical about its document purporting to be a code of practice for age verification, and then charges 90 quid + VAT to read it

19th March 2018

See article from theregister.co.uk

See article from shop.bsigroup.com

The Digital Policy Alliance is a cross-party group of parliamentarians with associate members from industry and academia. It has led efforts to develop a Publicly Available Specification (PAS 1296), which was published on 19 March. Crossbench British peer Merlin Hay, the Earl of Erroll, said:

We need to make the UK a safe place to do business. That's why we're producing a British PAS... that set out for the age check providers what they should do and what records they keep.

The document is expected to include a discussion on the background to age verification, set out the rules in accordance with the Digital Economy Act, and give a detailed look at the technology, with annexes on anonymity and how the system should work.

This PAS will sit alongside data protection and privacy rules set out in the General Data Protection Regulation and in the UK's Data Protection Bill, which is currently making its way through Parliament. Hay explained:

We can't put rules about data protection into the PAS... That is in the Data Protection Bill, he said. So we refer to them, but we can't mandate them inside this PAS -- but it's in there as 'you must obey the law'... [perhaps] that's been too subtle for the organisations that have been trying to take a swing at it.

What Hay didn't mention, though, was that all of this 'help' for British industry would come with a hefty £90 + VAT price tag for a 60-page document.

| |

Facebook censors France's iconic artwork, Liberty Leading the People

19th March 2018

See article from citizen.co.za

See article from news.artnet.com

Facebook has admitted a ghastly mistake after it banned an advert featuring French artist Eugène Delacroix's famous work, La Liberté guidant le peuple (Liberty Leading the People), because it depicts a bare-breasted woman. The 19th-century masterpiece was featured in an online campaign for a play showing in Paris when it was blocked on the social networking site this week. The play's director, Jocelyn Fiorina, said: A quarter of an hour after the advert was launched, it was blocked, with the company telling us we cannot show nudity.

He then posted a new advert with the same painting, the woman's breasts covered by a banner saying 'censored by Facebook', which was not banned. As always when Facebook's shoddy censorship system is found lacking, the company apologised profusely for its error.

| |

A teacher wins a rather symbolic court victory in France over Facebook, which banned Gustave Courbet's 1866 painting The Origin of the World.

19th March 2018

Thanks to Nick

See article from news.artnet.com

A teacher wins a rather symbolic court victory in France over Facebook, which banned Gustave Courbet's 1866 painting L'Origine du monde (The Origin of the World). After a seven-year legal battle, a French court has ruled that Facebook was wrong to close the social media account of educator Frédéric Durand without warning after he posted an image of Gustave Courbet's 1866 painting The Origin of the World. While the court agreed that Facebook was at fault, the social media giant does not have to pay damages. The court ruled that there was no damage because Durand was able to open another account. Durand was not impressed. He said: We are refuting this, we are making an appeal, and we will argue in the court of appeal that, actually, there was damage.

Durand's lawyer, Stéphane Cottineau, explained that when the social network deleted Durand's account in 2011, he lost his entire Facebook history, which he didn't use for social purposes, but rather to share his love of art, particularly of street art and the work of contemporary living painters.

| |

Whilst the EU ramps up internet censorship, particularly of people's criticism of its policies, the Council of Europe calls for internet censorship to be transparent and limited by law to the minimum necessary

18th March 2018

See article from linx.net

The Council of Europe is an intergovernmental body entirely separate from the European Union. With a wider membership of 47 states, it seeks to promote democracy, human rights and the rule of law, including by monitoring adherence to the rulings of the European Court of Human Rights. Its Recommendations are not legally binding on Member States, but are very influential in the development of national policy and of the policy and law of the European Union.

The Council of Europe has published a Recommendation to Member States on the roles and responsibilities of Internet intermediaries. The Recommendation declares that access to the Internet is a precondition for the ability effectively to exercise fundamental human rights, and seeks to protect users by calling for greater transparency, fairness and due process when interfering with content. The Recommendation's key provisions aimed at governments include:

- Public authorities should only make "requests, demands or other actions addressed to internet intermediaries that interferes with human rights and fundamental freedoms" when prescribed by law. They should therefore avoid asking intermediaries to remove content under their terms of service or to make their terms of service more restrictive.

- Legislation giving powers to public authorities to interfere with Internet content should clearly define the scope of those powers and available discretion, to protect against arbitrary application.

- When internet intermediaries restrict access to third-party content based on a State order, State authorities should ensure that effective redress mechanisms are made available and adhere to applicable procedural safeguards.

- When intermediaries remove content based on their own terms and conditions of service, this should not be considered a form of control that makes them liable for the third-party content for which they provide access.

- Member States should consider introducing laws to prevent vexatious lawsuits designed to suppress users' free expression, whether by targeting the user or the intermediary. In the US, these are known as "anti-SLAPP laws".

| |

But will a porn site with an unadvertised Russian connection follow these laws, or will it pass on IDs and browsing histories straight to the 'dirt digging' department of the KGB?

17th March 2018

See article from theregister.co.uk

The Open Rights Group, Myles Jackman and Pandora Blake have done a magnificent job in highlighting the dangers of mandating that porn companies verify the age of their customers.

Worst case scenario

In the worst case scenario, foreign porn companies will demand official ID from porn viewers and then be able to maintain a database of the complete browsing history of those officially identified viewers. And surely much to the alarm of the government and the newly appointed internet porn censors at the BBFC, this worst case scenario seems to be the clear favourite to get implemented. In particular Mindgeek, with a near monopoly on free porn tube sites, is taking the lead with its Age ID scheme.

Now for some bizarre reason, the government saw no need for its age verification to offer any specific protection for porn viewers, beyond that offered by existing and upcoming data protection laws. Given some of the things that Google and Facebook do with personal data, it seems that these laws are woefully inadequate for the context of porn viewing. For safety and national security reasons, data identifying porn users should be kept under total lock and key, and not used for any commercial reason whatsoever.

A big flaw

But therein lies the flaw of the law. The government is mandating that all websites, including those based abroad, should verify their users without specifying any data protection requirements beyond the law of the land. The flaw is that foreign websites are simply not obliged to respect British data protection laws. So as a topical example, there would be nothing to prevent a Russian porn site (maybe not identifying itself as Russian) from requiring ID and then passing the ID and subsequent porn browsing history straight over to its dirty tricks department.

Anyway, the government has made a total pig's ear of the concept with its conservative 'leave it to industry to find a solution' approach. The porn industry simply does not have the safety and security of its customers at heart. Perhaps the government should have invested in its own solution first; the national security implications might then have pushed it into at least considering user safety and security.

Where we are at

As mentioned above, campaigners have done a fine job in identifying the dangers of the government plan, and these have been picked up by practically all newspapers. These seem to have chimed with readers and the entire idea seems to be accepted as dangerous. In fact I haven't spotted anyone, not even the 'think of the children' charities, pushing for 'let's just get on with it'. And so now it's over to the authorities to try and convince people that they have a safe solution somewhere.

The Digital Policy Alliance

Perhaps as part of a propaganda campaign to win over the people, parliament's Digital Policy Alliance is just about to publish guidance on age verification policies. The alliance is a cross-party group that includes Merlin Hay, the Earl of Erroll, who made some good points about privacy concerns whilst the bill was being forced through the House of Lords. He said that a Publicly Available Specification (PAS) numbered 1296 is due to be published on 19 March. This will set out for the age check providers what they should do and what records they keep. The document is expected to include a discussion on the background to age verification, set out the rules in accordance with the Digital Economy Act, and give a detailed look at the technology, with annexes on anonymity and how the system should work. However, the document will carry no authority and is not set to become an official British standard. He explained: We can't put rules about data protection into the PAS... That is in the Data Protection Bill, he said. So we refer to them, but we can't mandate them inside this PAS -- but it's in there as 'you must obey the law'...

But of course Hay did not mention that Russian websites don't have to obey British data protection law.

And next the BBFC will have a crack at reducing people's fears

Elsewhere in the discussion, Hay suggested the British Board of Film and Internet Censorship could mandate that each site had to offer more than one age-verification provider, which would give consumers more choice. Next the BBFC will have a crack at minimising people's fears about age verification. It will publish its own guidance document towards the end of the month, and launch a public consultation about it.

| |

17th March 2018

As Social Media Companies Try To Stay Ahead Of European Lawmakers. By Tim Cushing See article from techdirt.com

| |

The EU's disgraceful censorship machines are inevitably aimed at far wider censorship than the cited targets of copyrighted movies and music, and GitHub is fighting back

16th March 2018

See article from blog.github.com

The EU is considering a copyright proposal that would require code-sharing platforms to monitor all content that users upload for potential copyright infringement (see the EU Commission's proposed Article 13 of the Copyright Directive). The proposal is aimed at music and videos on streaming platforms, based on a theory of a "value gap" between the profits those platforms make from uploaded works and what copyright holders of some uploaded works receive. However, the way it's written captures many other types of content, including code. We'd like to make sure developers in the EU who understand that automated filtering of code would make software less reliable and more expensive--and can explain this to EU policymakers--participate in the conversation.

Why you should care about upload filters

Upload filters ("censorship machines") are one of the most controversial elements of the copyright proposal, raising a number of concerns, including:

- Privacy: Upload filters are a form of surveillance, effectively a "general monitoring obligation" prohibited by EU law

- Free speech: Requiring platforms to monitor content contradicts intermediary liability protections in EU law and creates incentives to remove content

- Ineffectiveness: Content detection tools are flawed (generate false positives, don't fit all kinds of content) and overly burdensome, especially for small and medium-sized businesses that might not be able to afford them or the resulting litigation

Upload filters are especially concerning for software developers given that:

- Software developers create copyrightable works--their code--and those who choose an open source license want to allow that code to be shared

- False positives (and negatives) are especially likely for software code because code often has many contributors and layers, often with different licensing for different components

- Requiring code-hosting platforms to scan and automatically remove content could drastically impact software developers when their dependencies are removed due to false positives

| |

15th March 2018

European Parliament has been nobbled by a pro-censorship EU commissioner. See article from boingboing.net

| |

15th March 2018

Hateful tweets are the price we pay for internet freedom. By Ella Whelan See article from spiked-online.com

| |

EFF and 23 Groups Tell Congress to Oppose the CLOUD Act

14th March 2018

See article from eff.org

EFF and 23 other civil liberties organizations sent a letter to Congress urging Members and Senators to oppose the CLOUD Act and any efforts to attach it to other legislation. The CLOUD Act (S. 2383 and H.R. 4943) is a dangerous bill that would tear away global privacy protections by allowing police in the United States and abroad to grab cross-border data without following the privacy rules of where the data is stored. Currently, law enforcement requests for cross-border data often use a legal system called the Mutual Legal Assistance Treaties, or MLATs. This system ensures that, for example, should a foreign government wish to seize communications stored in the United States, that data is properly secured by the Fourth Amendment requirement for a search warrant.

The other groups signing the new coalition letter against the CLOUD Act are Access Now, Advocacy for Principled Action in Government, American Civil Liberties Union, Amnesty International USA, Asian American Legal Defense and Education Fund (AALDEF), Campaign for Liberty, Center for Democracy & Technology, CenterLink: The Community of LGBT Centers, Constitutional Alliance, Defending Rights & Dissent, Demand Progress Action, Equality California, Free Press Action Fund, Government Accountability Project, Government Information Watch, Human Rights Watch, Liberty Coalition, National Association of Criminal Defense Lawyers, National Black Justice Coalition, New America's Open Technology Institute, OpenMedia, People For the American Way, and Restore The Fourth.

The CLOUD Act allows police to bypass the MLAT system, removing vital U.S. and foreign country privacy protections. As we explained in our earlier letter to Congress, the CLOUD Act would:

- Allow foreign governments to wiretap on U.S. soil under standards that do not comply with U.S. law;

- Give the executive branch the power to enter into foreign agreements without Congressional approval or judicial review, including foreign nations with a well-known record of human rights abuses;

- Possibly facilitate foreign government access to information that is used to commit human rights abuses, like torture; and

- Allow foreign governments to obtain information that could pertain to individuals in the U.S. without meeting constitutional standards.

You can read more about EFF's opposition to the CLOUD Act here.

The CLOUD Act creates a new channel for foreign governments seeking data about non-U.S. persons who are outside the United States. This new data channel is not governed by the laws of where the data is stored. Instead, the foreign police may demand the data directly from the company that handles it. Under the CLOUD Act, should a foreign government request data from a U.S. company, the U.S. Department of Justice would not need to be involved at any stage. Also, such requests for data would not need to receive individualized, prior judicial review before the data request is made.

The CLOUD Act's new data delivery method lacks not just meaningful judicial oversight, but also meaningful Congressional oversight, too. Should the U.S. executive branch enter a data exchange agreement--known as an "executive agreement"--with foreign countries, Congress would have little time and power to stop them. As we wrote in our letter:

"[T]he CLOUD Act would allow the executive branch to enter into agreements with foreign governments--without congressional approval. The bill stipulates that any agreement negotiated would go into effect 90 days after Congress was notified of the certification, unless Congress enacts a joint resolution of disapproval, which would require presidential approval or sufficient votes to overcome a presidential veto."

And under the bill, the president could agree to enter executive agreements with countries that are known human rights abusers. Troublingly, the bill also fails to protect U.S. persons from the predictable, non-targeted collection of their data. When foreign governments request data from U.S. companies about specific "targets" who are non-U.S. persons not living in the United States, these governments will also inevitably collect data belonging to U.S. persons who communicate with the targeted individuals. Much of that data can then be shared with U.S. authorities, who can then use the information to charge U.S. persons with crimes. That data sharing, and potential criminal prosecution, requires no probable cause warrant as required by the Fourth Amendment, violating our constitutional rights. The CLOUD Act is a bad bill. We urge Congress to stop it, and any attempts to attach it to must-pass spending legislation.

| |

14th March 2018

The more countries try to restrict virtual private networks, the more people use them. By Josephine Wolff See article from slate.com

14th March 2018

A commendably negative take from The Sun. A legal expert has revealed the hidden dangers of strict new porn laws, which will force Brits to hand over personal info in exchange for access to XXX videos. See article from thesun.co.uk

Is it just me, or is Matt Hancock a little too keen to advocate ID checks just so the state can control 'screen time'? Are we sure that such snooping wouldn't be abused for other purposes of state control?

13th March 2018

See article from alphr.com

It's no secret the UK government has a vendetta against the internet and social media. Now Matt Hancock, the secretary of state for Digital, Culture, Media and Sport (DCMS), wants to push that further and enforce screen time cutoffs for UK children on Facebook, Instagram and Snapchat. Talking to the Sunday Times, Hancock explained that the negative impacts of social media need to be dealt with, and he laid out his idea for an age-verification system to apply more widely than just porn viewing.

He outlined that age-verification could be handled similarly to film classifications, with sites like YouTube being restricted to those over 18. The worrying thing, however, is his plan to create mandatory screen time cutoffs for all children.

Referencing the porn restrictions, he said: People said 'How are you going to police that?' I said if you don't have it, we will take down your website in Britain. The end result is that the big porn sites are introducing this globally, so we are leading the way. ...Read the full article from alphr.com

Advocating internet censorship

See article from gov.uk

Whenever politicians speak of 'balance' it inevitably means that the balance will soon swing from people's rights towards state control. Matt Hancock more or less announced further internet censorship in a speech at the Oxford Media Convention. He said: Our schools and our curriculum have a valuable role to play so students can tell fact from fiction and think critically about the news that they read and watch. But it is not easy for our children, or indeed for anyone who reads news online. Although we have robust mechanisms to address disinformation in the broadcast and press industries, this is simply not the case online.

Take the example of three different organisations posting a video online. If a broadcaster published it on their on demand service, the content would be a matter for Ofcom. If a newspaper posted it, it would be a matter for IPSO. If an individual published it online, it would be untouched by media regulation.

Now I am passionate in my belief in a free and open Internet ....BUT... freedom does not mean the freedom to harm others. Freedom can only exist within a framework. Digital platforms need to step up and play their part in establishing online rules and working for the benefit of the public that uses them. We've seen some positive first steps from Google, Facebook and Twitter recently, but even tech companies recognise that more needs to be done.

We are looking at the legal liability that social media companies have for the content shared on their sites. Because it's a fact on the web that online platforms are no longer just passive hosts. But this is not simply about applying publisher or broadcaster standards of liability to online platforms. There are those who argue that every word on every platform should be the full legal responsibility of the platform. But then how could anyone ever let me post anything, even though I'm an extremely responsible adult?

This is new ground and we are exploring a range of ideas... including where we can tighten current rules to tackle illegal content online... and where platforms should still qualify for 'host' category protections. We will strike the right balance between addressing issues with content online and allowing the digital economy to flourish. This is part of the thinking behind our Digital Charter. We will work with publishers, tech companies, civil society and others to establish a new framework...

A change of heart on press censorship

It was only a few years ago that the government were all in favour of creating a press censor. However, new fears such as Russian interference and fake news have turned the mainstream press into the champions of trustworthy news. And so previous plans for a press censor have been put on hold. Hancock said in the Oxford speech: Sustaining high quality journalism is a vital public policy goal. The scrutiny, the accountability, the uncovering of wrongs and the fuelling of debate is mission critical to a healthy democracy. After all, journalists helped bring Stephen Lawrence's killers to justice and have given their lives reporting from places where many of us would fear to go. And while I've not always enjoyed every article written about me, that's not what it's there for. I tremble at the thought of a media regulated by the state in a time of malevolent forces in politics. Get this wrong and I fear for the future of our liberal democracy. We must get this right.

I want publications to be able to choose their own path, making decisions like how to make the most out of online advertising and whether to use paywalls. After all, it's your copy, it's your IP. The removal of Google's 'first click free' policy has been a welcome move for the news sector. But I ask the question - if someone is protecting their intellectual property with a paywall, shouldn't that be promoted, not just neutral in the search algorithm? I've watched the industry grapple with the challenge of how to monetise content online, with different models of paywalls and subscriptions. Some of these have been successful, and all of them have evolved over time. I've been interested in recent ideas to take this further and develop new subscription models for the industry. Our job in Government is to provide the framework for a market that works, without state regulation of the press.

13th March 2018

Tim Berners-Lee, founder of the World Wide Web, laments the power grab of the internet by commercial giants. See article from alphr.com

A few more details from the point of view of British adult websites

12th March 2018

See article from wired.co.uk

12th March 2018

And a little humorous criticism seems sure to warrant a police visit. See article from theglobeandmail.com

The Government announces a new timetable for the introduction of internet porn censorship, now set to be in force by the end of 2018

11th March 2018

See press release from gov.uk

In a press release, the DCMS describes its digital strategy, including a delayed introduction of internet porn censorship. The press release states: The Strategy also reflects the Government's ambition to make the internet safer for children by requiring age verification for access to commercial pornographic websites in the UK. In February, the British Board of Film Classification (BBFC) was formally designated as the age verification regulator. Our priority is to make the internet safer for children and we believe this is best achieved by taking time to get the implementation of the policy right. We will therefore allow time for the BBFC as regulator to undertake a public consultation on its draft guidance which will be launched later this month. For the public and the industry to prepare for and comply with age verification, the Government will also ensure a period of up to three months after the BBFC guidance has been cleared by Parliament before the law comes into force. It is anticipated age verification will be enforceable by the end of the year.

11th March 2018

The BBFC takes its first steps to explain how it will stop people from watching internet porn. See article from bbfc.co.uk

UK censorship minister seems in favour of state controls on screen time for children

10th March 2018

See article from stv.tv

Children could have time limits imposed when they are on social media sites, the secretary of state for digital, culture, media and censorship has suggested. Matt Hancock told The Times that an age-verification system could be used to restrict screen time. He said: There is a genuine concern about the amount of screen time young people are clocking up and the negative impact it could have on their lives. For an adult I wouldn't want to restrict the amount of time you are on a platform but for different ages it might be right to have different time cut-offs.

Germany looks to create an appeals body to contest false censorship caused by the undue haste required for takedowns

9th March 2018

See article from csmonitor.com

A German law requiring social media companies like Facebook and Twitter to remove reported hate speech, without allowing enough time to consider the merits of each report, is set to be revised following criticism that too much online content is being blocked. The law, called NetzDG for short, is an international test case, and how it plays out is being closely watched by other countries considering similar measures. German politicians forming a new government told Reuters they want to add an amendment to help web users get incorrectly deleted material restored online. The lawmakers are also pushing for social media firms to set up an independent body to review and respond to reports of offensive content from the public, rather than leaving it to the social media companies, who by definition care more about profits than supporting free speech. Such a system, similar to how video games are policed in Germany, could allow a more considered approach to complex decisions about whether to block content, legal experts say.

Facebook, which says it has 1,200 people in Germany working on reviewing posts, out of 14,000 globally responsible for moderating content and account security, said it was not pursuing a strategy to delete more than necessary. Richard Allan, Facebook's vice president for EMEA public policy, said: People think deleting illegal content is easy but it's not. Facebook reviews every NetzDG report carefully and with legal expertise, where appropriate. When our legal experts advise us, we follow their assessment so we can meet our obligations under the law.

Johannes Ferchner, spokesman on justice and consumer protection for the Social Democrats and one of the architects of the law, said: We will add a provision so that users have a legal possibility to have unjustly deleted content restored.

Thomas Jarzombek, a Christian Democrat who helped refine the law, said the separate body to review complaints should be established, adding that social media companies were deleting too much online content. NetzDG already allows for such a self-regulatory body, but companies have chosen to go their own way instead. According to the coalition agreement, both parties want to develop the law to encourage the establishment of such a body.

South Africa's lower house passes bill to apply traditional pre-vetting censorship to commercial content on the internet

9th March 2018

7th March 2018. See article from ewn.co.za

South Africa's Film and Publications Amendment Bill will apply traditional pre-vetting style censorship to everything posted on the internet in the country. The National Assembly has approved the bill in a vote of 189 in favour, with 35 against and no